TF-Notes

From Depth Psychology Study Wiki


== Shallow Neural Networks vs. Deep Neural Networks ==

'''Shallow Neural Networks:'''

* Consist of '''one''' hidden layer (occasionally two, but rarely more).

* Typically take '''feature vectors''' as input, so raw data must first be preprocessed into structured features.

'''Deep Neural Networks:'''

* Consist of '''three or more''' hidden layers.

* Typically have '''more neurons''' per layer, although the exact width depends on the model design.

* Can process '''raw, high-dimensional data''' such as images, text, and audio directly, often using specialized architectures such as Convolutional Neural Networks (CNNs) for images or Recurrent Neural Networks (RNNs) for sequences.
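
To make the structural difference concrete, here is a minimal NumPy sketch of a fully connected network whose depth is set by a list of layer sizes. The layer sizes, the helper names `init_mlp` and `forward`, and the use of He initialization are illustrative assumptions, not part of the original notes. Both networks consume the same batch of feature vectors and differ only in how many hidden layers sit between input and output.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


def init_mlp(layer_sizes, seed=0):
    """Return one (weights, bias) pair per layer for the given layer sizes."""
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # He initialization: a common choice for ReLU networks (an assumption here)
        W = rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out))
        params.append((W, np.zeros(n_out)))
    return params


def forward(x, params):
    """ReLU on every hidden layer, linear output layer."""
    for W, b in params[:-1]:
        x = relu(x @ W + b)
    W, b = params[-1]
    return x @ W + b


# Hypothetical sizes: 20 input features, 3 outputs
shallow = init_mlp([20, 32, 3])          # 1 hidden layer
deep = init_mlp([20, 64, 64, 64, 3])     # 3 hidden layers

x = np.random.default_rng(1).normal(size=(5, 20))  # batch of 5 feature vectors
print(len(shallow) - 1, forward(x, shallow).shape)  # 1 (5, 3)
print(len(deep) - 1, forward(x, deep).shape)        # 3 (5, 3)
```

The number of hidden layers is the number of weight matrices minus one, since the last matrix maps to the output layer.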

== Why deep learning took off: Advancements in the field ==

* '''ReLU Activation Function:'''

** Mitigates the '''vanishing gradient problem''': its derivative is 1 for every positive input, whereas the sigmoid's derivative never exceeds 0.25, so gradients shrink far less as they are backpropagated through many layers. This makes much deeper networks trainable.

* '''Availability of More Data:'''

** Deep learning benefits from '''large datasets''', which have become accessible through the growth of the internet, digital media, and data collection tools.

* '''Increased Computational Power:'''

** '''GPUs''' and specialized hardware (e.g., TPUs) allow faster training of deep models.

** Models that once took '''days or weeks''' to train can now be trained in '''hours''' or '''days'''.

* '''Algorithmic Innovations:'''

** Advances such as '''batch normalization''', '''dropout''', and '''better weight initialization''' have improved the stability and efficiency of training.

* '''Plateau of Conventional Machine Learning Algorithms:'''

** The performance of traditional algorithms often plateaus beyond a certain amount of data or model complexity.

** Deep learning performance tends to keep improving as more training data becomes available.
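
The ReLU point can be checked with a toy calculation. During backpropagation, the gradient reaching an early layer is multiplied by one activation derivative per layer. The sketch below multiplies only those derivatives, ignoring the weights (which also scale the gradient), and assumes every layer sees the same pre-activation: 0 for the sigmoid, where its derivative peaks, and 1 for ReLU. These simplifications are for illustration only.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # maximum value is 0.25, reached at z = 0


def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # 1 for positive inputs, 0 otherwise


def chained_grad(grad_fn, z, depth):
    """Multiply one activation derivative per layer (toy model: weights ignored,
    every layer assumed to see the same pre-activation z)."""
    g = 1.0
    for _ in range(depth):
        g *= grad_fn(z)
    return g


for depth in (1, 5, 10):
    print(depth, chained_grad(sigmoid_grad, 0.0, depth), chained_grad(relu_grad, 1.0, depth))
# 1 0.25 1.0
# 5 0.0009765625 1.0
# 10 9.5367431640625e-07 1.0
```

Even in the sigmoid's best case, ten layers scale the gradient by about one millionth, while active ReLU units pass it through unchanged.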

In short, the success of deep learning is driven by algorithmic advances, the availability of large datasets, and powerful computation.

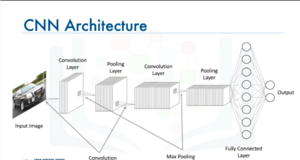

== CNNs vs. Traditional Neural Networks ==

* CNNs are a type of deep neural network. They are specialized architectures designed for grid-like data, such as images and videos. Unlike traditional fully connected networks, CNNs use '''convolutions''' to learn spatial hierarchies of features, from simple edges in early layers to more complex shapes in deeper ones. This lets them capture local patterns and relationships efficiently, making them well suited to image and visual data.

* '''Key Features of CNNs:'''

** '''Input as Images:''' CNNs take images directly as input, so they can process raw pixel data without extensive manual feature engineering.

** '''Efficiency in Training:''' By leveraging local receptive fields, parameter sharing (the same filter weights are reused at every position), and spatial hierarchies, CNNs need far fewer parameters than fully connected networks on the same input, which makes training more computationally efficient.

** '''Applications:''' CNNs excel at '''image recognition''', '''object detection''', '''segmentation''', and other computer vision tasks.
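
Parameter sharing can be illustrated by counting weights. A convolutional layer learns one small kernel per filter and reuses it at every position, so its parameter count does not depend on the image size; a fully connected layer needs one weight per input value per unit. The sketch below compares a 2x2 convolution with 16 filters against a dense layer with 16 units on RGB inputs of several sizes. The helper names are hypothetical; the counts follow the standard formulas (weights plus one bias per filter or unit).

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # Each filter spans all input channels; its weights are shared across
    # every spatial position. Plus one bias per filter.
    return kernel_h * kernel_w * in_channels * filters + filters


def dense_params(n_inputs, units):
    # Every input value connects to every unit. Plus one bias per unit.
    return n_inputs * units + units


for size in (32, 128, 512):
    n_inputs = size * size * 3  # flattened RGB image
    print(size, conv2d_params(2, 2, 3, 16), dense_params(n_inputs, 16))
# 32 208 49168
# 128 208 786448
# 512 208 12582928
```

The convolution still produces a full feature map rather than 16 numbers, so this comparison is about learned weights, not about compute or output size.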

[[File:CNN Architecture.png|thumb]]

=== '''Convolutional Layers and ReLU''' ===

* '''Convolutional Layers''' slide a '''filter (kernel)''' across the input data, computing a weighted sum at each position to extract features such as edges, textures, or patterns. The filter weights are learned during training.

* After the convolution, the resulting feature map passes through a '''non-linear activation function''', commonly '''ReLU (Rectified Linear Unit)''', which replaces negative values with zero: ReLU(x) = max(0, x).
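
A convolution followed by ReLU can be sketched in a few lines of NumPy. This is a single-channel, stride-1, no-padding version written for clarity rather than speed; like deep learning libraries, it computes cross-correlation (the kernel is not flipped). The kernel is hand-picked here to respond to dark-to-bright vertical edges, whereas a real convolutional layer learns its kernel weights during training. The function names are illustrative.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Single-channel 2-D convolution, stride 1, no padding.
    Like Keras' Conv2D, it computes cross-correlation (the kernel is not flipped)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the patch currently under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(z):
    return np.maximum(0.0, z)


# Toy 5x5 image: each row is dark, dark, bright, bright, dark
image = np.array([[0, 0, 1, 1, 0]] * 5, dtype=float)
# Hand-picked (hypothetical) kernel: responds positively to dark-to-bright vertical edges
kernel = np.array([[-1.0, 1.0],
                   [-1.0, 1.0]])

raw = conv2d_valid(image, kernel)  # every row: [0, 2, 0, -2]
feature_map = relu(raw)            # every row: [0, 2, 0, 0]
```

The bright-to-dark edge produces a negative response, which ReLU zeroes out, so the final feature map highlights only the edge type this kernel detects.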

=== '''Pooling Layers''' ===

Pooling layers '''down-sample''' each feature map, reducing its spatial size while retaining the most important features.

==== '''Why Use Pooling?''' ====

* Reduces '''dimensionality''', which decreases computation and helps reduce overfitting.

* Keeps the most significant information from the feature map (e.g., max pooling keeps the strongest activation in each window).

* Increases the '''spatial invariance''' of the network (i.e., its ability to recognize a pattern even when it shifts slightly in position).
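
Max pooling, the variant used in the Keras code on this page, can be sketched as follows. The function assumes a single 2-D feature map and no padding (matching Keras' default `padding='valid'`); the 4x4 input values are made up. The second part moves the largest value to another cell of its 2x2 window and shows that the pooled output does not change, which is the small-shift invariance described above. A shift that crosses a window boundary can change the output.

```python
import numpy as np


def max_pool2d(feature_map, pool=2, stride=2):
    """Max pooling over a single 2-D feature map with no padding
    (matching Keras' default padding='valid')."""
    h, w = feature_map.shape
    out_h = (h - pool) // stride + 1
    out_w = (w - pool) // stride + 1
    out = np.empty((out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            # Keep only the strongest activation in each window
            out[i, j] = feature_map[r:r + pool, c:c + pool].max()
    return out


# Made-up 4x4 feature map: 16 values are reduced to 4
fm = np.array([[1, 3, 2, 0],
               [4, 2, 1, 1],
               [0, 1, 5, 6],
               [2, 2, 7, 8]])
print(max_pool2d(fm))
# [[4 2]
#  [2 8]]

# Move the 4 to another cell of the same 2x2 window: the pooled output is unchanged
shifted = fm.copy()
shifted[0, 1], shifted[1, 0] = fm[1, 0], fm[0, 1]
print(np.array_equal(max_pool2d(shifted), max_pool2d(fm)))  # True
```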

== Keras Code ==

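```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

num_classes = 10  # placeholder: set to the number of target classes
input_shape = (128, 128, 3)  # 128x128 RGB images

model = Sequential()
# Block 1: 16 filters of size 2x2, then 2x2 max pooling with stride 2
model.add(Conv2D(16, kernel_size=(2, 2), strides=(1, 1), activation='relu', input_shape=input_shape))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
# Block 2: 32 filters of size 2x2, then 2x2 max pooling (strides default to pool_size)
model.add(Conv2D(32, kernel_size=(2, 2), strides=(1, 1), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
# Classifier head: flatten the feature maps, one hidden dense layer, softmax output
model.add(Flatten())
model.add(Dense(100, activation='relu'))
model.add(Dense(num_classes, activation='softmax'))
```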
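
As a sanity check on the Keras model, the output size of each layer can be worked out by hand. With `'valid'` padding (the Keras default), a window of size k moved with stride s over n positions gives floor((n - k) / s) + 1 outputs, and `MaxPooling2D` uses a stride equal to its pool size when `strides` is not given. The sketch below applies this to the 128x128x3 input and also counts trainable parameters; `num_classes = 10` and the helper `conv_out` are hypothetical. If Keras is installed, `model.summary()` should report matching shapes and counts.

```python
def conv_out(n, kernel, stride=1):
    # Output length with 'valid' padding: floor((n - kernel) / stride) + 1
    return (n - kernel) // stride + 1


num_classes = 10  # hypothetical; use the real number of classes
size, channels = 128, 3
params = []

size = conv_out(size, 2)                  # Conv2D(16, 2x2, stride 1)  -> 127x127x16
params.append(2 * 2 * channels * 16 + 16)
channels = 16
size = conv_out(size, 2, stride=2)        # MaxPooling2D(2x2, stride 2) -> 63x63x16
size = conv_out(size, 2)                  # Conv2D(32, 2x2, stride 1)  -> 62x62x32
params.append(2 * 2 * channels * 32 + 32)
channels = 32
size = conv_out(size, 2, stride=2)        # MaxPooling2D(2x2)           -> 31x31x32
flat = size * size * channels             # Flatten                     -> 30752
params.append(flat * 100 + 100)           # Dense(100)
params.append(100 * num_classes + num_classes)  # Dense(num_classes)

print(size, flat, params, sum(params))
# 31 30752 [208, 2080, 3075300, 1010] 3078598
```

In this configuration, almost all of the roughly 3.08 million parameters sit in the first `Dense` layer after `Flatten`; the two convolutional layers contribute fewer than 2,300.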

Revision as of 23:00, 22 January 2025
