TF-Notes

Latest revision as of 22:52, 8 February 2025

Shallow Neural Networks vs. Deep Neural Networks

Shallow Neural Networks:

- Consist of one hidden layer (occasionally two, but rarely more).

- Typically take inputs as feature vectors, where preprocessing transforms raw data into structured inputs.

Deep Neural Networks:

- Consist of three or more hidden layers.

- Often use more neurons per layer, depending on model design.

- Can process raw, high-dimensional data such as images, text, and audio directly, often using specialized architectures like Convolutional Neural Networks (CNNs) for images or Recurrent Neural Networks (RNNs) for sequences.

Why deep learning took off: Advancements in the field

- ReLU Activation Function:

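- Mitigates the vanishing gradient problem: its gradient is 1 for positive inputs, so gradients shrink far less when backpropagated through many layers than with sigmoid or tanh. This enables the training of much deeper networks.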

- Availability of More Data:

- Deep learning benefits from large datasets which have become accessible due to the growth of the internet, digital media, and data collection tools.

- Increased Computational Power:

- GPUs and specialized hardware (e.g., TPUs) allow faster training of deep models.

- Training runs that once took days or weeks can now finish in hours or days.

- Algorithmic Innovations:

- Advancements such as batch normalization, dropout, and better weight initialization have improved the stability and efficiency of training.

- Plateau of Conventional Machine Learning Algorithms:

- The performance of traditional algorithms tends to plateau once the amount of data (or the model complexity) passes a certain threshold.

- Deep learning continues to scale with data size, improving performance as more data becomes available.
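A quick numeric check of the ReLU point, as a sketch: backpropagation multiplies one activation derivative per layer, and the sigmoid's derivative never exceeds 0.25, while ReLU's derivative is exactly 1 for positive inputs. The 10-layer depth is an arbitrary assumption, and the weights are deliberately left out so only the activation's contribution is visible.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z: float) -> float:
    s = sigmoid(z)
    return s * (1.0 - s)  # maximum value is 0.25, reached at z = 0

def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0

depth = 10  # assumed number of layers

# Product of per-layer activation derivatives (weights omitted for clarity)
sigmoid_chain = sigmoid_grad(0.0) ** depth  # best case for the sigmoid
relu_chain = relu_grad(1.0) ** depth        # any positive pre-activation

print(f"sigmoid: {sigmoid_chain:.2e}")  # 9.54e-07
print(f"relu:    {relu_chain}")         # 1.0
```

Even in the sigmoid's best case the signal shrinks by roughly a factor of a million over ten layers, while the ReLU path passes it through unchanged.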

In short:

The success of deep learning is due to advancements in the field, the availability of large datasets, and powerful computation.

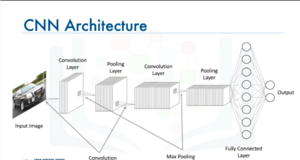

CNNs (supervised tasks) vs. Traditional Neural Networks:

- CNNs are considered deep neural networks. They are specialized architectures designed for grid-like data, such as images and videos. Unlike traditional fully connected networks, CNNs use convolutions to detect spatial hierarchies in data, which lets them efficiently capture local patterns and their relationships and makes them well suited to image and visual data processing.

Key Features of CNNs:

- Input as Images: CNNs directly take images as input, which allows them to process raw pixel data without extensive manual feature engineering.

- Efficiency in Training: By leveraging properties such as local receptive fields, parameter sharing, and spatial hierarchies, CNNs make the training process computationally efficient compared to fully connected networks.

- Applications: CNNs excel at solving problems in image recognition, object detection, segmentation, and other computer vision tasks.
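To make the parameter-sharing point concrete, here is a back-of-the-envelope count for a 28×28×1 input (the shape used in the Keras code in these notes). It compares a fully connected layer with 16 units against a Conv2D layer with 16 filters of size 5×5, like the Conv2D(16, (5, 5)) layer below. The specific sizes are illustrative assumptions.

```python
def dense_params(n_inputs: int, units: int) -> int:
    # One weight per (input, unit) pair plus one bias per unit
    return n_inputs * units + units

def conv2d_params(kernel_h: int, kernel_w: int, in_channels: int, filters: int) -> int:
    # Each filter's kernel is shared across all spatial positions,
    # so the count does not depend on the image size at all
    return kernel_h * kernel_w * in_channels * filters + filters

image_values = 28 * 28 * 1
fc = dense_params(image_values, 16)
conv = conv2d_params(5, 5, 1, 16)
print(fc, conv)  # 12560 416
```

The two layers do not produce the same kind of output (the convolution yields a 24×24×16 feature map); the point is only that the convolutional parameter count stays fixed no matter how large the image grows.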

Convolutional Layers and ReLU

- Convolutional Layers apply a filter (kernel) over the input data to extract features such as edges, textures, or patterns.

- After the convolution operation, the resulting feature map undergoes a non-linear activation function, commonly ReLU (Rectified Linear Unit).
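A minimal NumPy sketch of one convolutional filter followed by ReLU: the kernel slides over the image with stride 1 and no padding, a weighted sum is taken at each position, and negative responses are then zeroed. Like Keras' Conv2D, this computes cross-correlation (the kernel is not flipped). The toy image and the hand-picked vertical-edge kernel are illustrative assumptions; in a real CNN the kernel values are learned.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 2D cross-correlation, stride 1, no padding."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)

# Toy 5x5 image: a bright vertical stripe in columns 2-3
image = np.array([[0, 0, 1, 1, 0]] * 5, dtype=float)
# Vertical-edge detector: responds positively to dark-to-bright transitions
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

raw_map = conv2d_valid(image, kernel)  # each row is [3, 3, -3]
feature_map = relu(raw_map)            # each row is [3, 3, 0]
print(raw_map)
print(feature_map)
```

The raw map responds negatively to the bright-to-dark edge on the right of the stripe; ReLU discards that response, which is the non-linearity applied after each convolution.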

Pooling Layers

Pooling layers are used to down-sample the feature map, reducing its size while retaining important features.

Why Use Pooling?

- Reduces dimensionality, decreasing computation and preventing overfitting.

- Keeps the most significant information from the feature map.

- Increases the spatial invariance of the network (i.e., the ability to recognize patterns regardless of location).
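The down-sampling step can be sketched in NumPy as 2×2 max pooling with stride 2, the same setting as MaxPooling2D(pool_size=(2, 2), strides=(2, 2)) in the Keras code below. The feature-map values are made up for illustration.

```python
import numpy as np

def max_pool2d(feature_map: np.ndarray, size: int = 2, stride: int = 2) -> np.ndarray:
    """Max pooling over a single-channel feature map (no padding)."""
    out_h = (feature_map.shape[0] - size) // stride + 1
    out_w = (feature_map.shape[1] - size) // stride + 1
    out = np.empty((out_h, out_w), dtype=feature_map.dtype)
    for i in range(out_h):
        for j in range(out_w):
            window = feature_map[i * stride:i * stride + size,
                                 j * stride:j * stride + size]
            out[i, j] = window.max()  # keep only the strongest activation
    return out

fmap = np.array([[1, 3, 2, 0],
                 [4, 6, 1, 1],
                 [0, 2, 5, 7],
                 [1, 1, 8, 3]])
pooled = max_pool2d(fmap)
print(pooled)  # [[6 2]
               #  [2 8]]
```

The 4×4 map shrinks to 2×2 (75% fewer values), yet the strongest response in each region survives; shifting a feature by one pixel within its window would not change the output.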

Keras Code

One Set of Convolutional and Pooling Layers:

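```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Conv2D, MaxPooling2D, Flatten, Dense

num_classes = 10  # assumption: number of target classes (e.g., 10 digit classes)

model = Sequential()
model.add(Input(shape=(28, 28, 1)))
model.add(Conv2D(16, (5, 5), strides=(1, 1), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
model.add(Flatten())
model.add(Dense(100, activation='relu'))
model.add(Dense(num_classes, activation='softmax'))

# Compile model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```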

Two Sets of Convolutional and Pooling Layers:

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Conv2D, MaxPooling2D, Flatten, Dense

num_classes = 10  # assumption: number of target classes (e.g., 10 digit classes)

model = Sequential()
model.add(Input(shape=(28, 28, 1)))
model.add(Conv2D(16, (5, 5), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
model.add(Conv2D(8, (2, 2), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2), strides=(2, 2)))
model.add(Flatten())
model.add(Dense(100, activation='relu'))
model.add(Dense(num_classes, activation='softmax'))

# Compile model
model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])
```

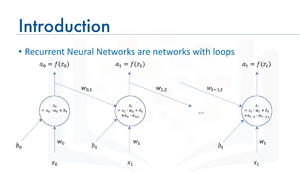

Recurrent Neural Networks (supervised tasks)

- Recurrent Neural Networks (RNNs) are a class of neural networks specifically designed to handle sequential data. They have loops that allow information to persist, meaning they don't just take the current input at each time step but also consider the output (or hidden state) from the previous time step. This enables them to process data where the order and context of inputs matter, such as time series, text, or audio.

How RNNs Work:

- Sequential Data Processing:

- At each time step $t$, the network takes:

- The current input $x_t$,

- A bias term $b$ (the same learned bias is reused at every time step),

- And the hidden state (output) from the previous time step, $a_{t-1}$.

- These are combined (a weighted sum plus the bias) into $z_t$, from which the current hidden state $a_t$ is computed.

- Recurrent Connection:

- The feedback loop, as shown in the diagram, allows information to persist across time steps. This is the "memory" mechanism of RNNs.

- The connection between $a_{t-1}$ (previous state) and $z_t$ (current computation) captures temporal dependencies in sequential data.

- Activation Function:

- The raw output $z_t$ passes through a non-linear activation function $f$ (commonly tanh or ReLU) to compute $a_t$, the current output or hidden state.
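Written out, the recurrence above is $z_t = W_x x_t + W_a a_{t-1} + b$ and $a_t = f(z_t)$. The weight matrices $W_x$ and $W_a$ are not named in these notes; they are introduced here as the standard formulation (equivalent to what a Keras SimpleRNN cell computes). A NumPy sketch of the forward pass with $f = \tanh$, using random weights and arbitrary sizes:

```python
import numpy as np

def rnn_forward(xs, W_x, W_a, b, a0):
    """Run a simple RNN over a sequence.

    xs has shape (T, input_dim); returns hidden states of shape (T, hidden_dim).
    """
    a_prev = a0
    states = []
    for x_t in xs:
        z_t = W_x @ x_t + W_a @ a_prev + b  # combine current input and previous state
        a_t = np.tanh(z_t)                  # non-linear activation f
        states.append(a_t)
        a_prev = a_t                        # the recurrent "memory" passed to step t+1
    return np.stack(states)

rng = np.random.default_rng(0)
input_dim, hidden_dim, T = 3, 4, 5  # arbitrary sizes for illustration
W_x = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_a = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b = np.zeros(hidden_dim)
xs = rng.normal(size=(T, input_dim))

states = rnn_forward(xs, W_x, W_a, b, a0=np.zeros(hidden_dim))
print(states.shape)  # (5, 4)
```

Note that the same $W_x$, $W_a$, and $b$ are used at every step; only the hidden state changes as the loop moves through the sequence.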

Long Short-Term Memory (LSTM) Model

A popular RNN variant, usually abbreviated as LSTM.

LSTMs are a type of Recurrent Neural Network (RNN) designed to mitigate the vanishing gradient problem, allowing them to capture long-term dependencies in sequential data. They achieve this through a memory cell and a system of gates that regulate the flow of information.

Applications Include:

- Image Generation:

- LSTMs generate images pixel by pixel or feature by feature.

- Often combined with Convolutional Neural Networks (CNNs) for spatial feature extraction.

- Handwriting Generation:

- Learn sequences of strokes and predict future strokes to generate realistic handwriting.

- Can mimic human writing styles given text input.

- Automatic Captioning for Images and Videos:

- Combines CNNs (for extracting visual features) with LSTMs (for generating captions).

- Example: "A group of people playing soccer in a field."

Key Features of LSTMs

- Memory Cell:

- Stores information for long durations, addressing the short-term memory limitations of standard RNNs.

- Gating Mechanism:

- Forget Gate: Decides which parts of the memory to discard.

- Input Gate: Determines what new information to add to the memory.

- Output Gate: Controls what part of the memory is output at the current step.

- Handles Long-Term Dependencies:

- LSTMs can maintain context over hundreds of time steps, making them suitable for tasks like text generation, speech synthesis, and video processing.

- Flexible Sequence Modeling:

- Suitable for variable-length input sequences, such as sentences, time-series data, or video frames.
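The gating mechanism can be sketched as a single LSTM time step in NumPy. It follows the standard LSTM equations (sigmoid gates, tanh candidate and output). Stacking all four gate pre-activations into one weight matrix, and their order (forget, input, candidate, output), are assumptions made for brevity; this is not a description of Keras' internal weight layout.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4*H, D) input weights, U: (4*H, H) recurrent weights, b: (4*H,) biases,
    stacked in the assumed order: forget, input, candidate, output.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    f = sigmoid(z[0:H])          # forget gate: how much of c_prev to keep
    i = sigmoid(z[H:2 * H])      # input gate: how much new information to write
    g = np.tanh(z[2 * H:3 * H])  # candidate values for the memory cell
    o = sigmoid(z[3 * H:4 * H])  # output gate: how much of the cell to expose
    c_t = f * c_prev + i * g     # updated memory cell
    h_t = o * np.tanh(c_t)       # new hidden state / output
    return h_t, c_t

rng = np.random.default_rng(42)
D, H = 3, 2  # arbitrary input and hidden sizes
W = rng.normal(size=(4 * H, D))
U = rng.normal(size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(6, D)):  # a toy sequence of 6 steps
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h, c)
```

Because the cell state is updated additively (`c_t = f * c_prev + i * g`), a forget gate close to 1 lets information, and gradients, pass through many steps largely unchanged. This is how LSTMs maintain long-term context.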

Additional Notes

- Variants:

- GRUs (Gated Recurrent Units): A simplified version of LSTMs with fewer parameters, often faster to train but sometimes less effective for longer dependencies.

- Bi-directional LSTMs: Process sequences in both forward and backward directions, capturing past and future context simultaneously.

- Use Cases Beyond the Above:

- Speech Recognition: Transcribe spoken words into text.

- Music Generation: Compose music by predicting the sequence of notes.

- Anomaly Detection: Detect unusual patterns in time-series data, like network intrusion or equipment failure.

- Challenges:

- LSTMs are computationally more expensive compared to simpler RNNs.

- They require careful hyperparameter tuning (e.g., learning rate, number of layers, units per layer).

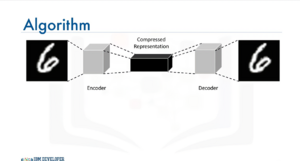

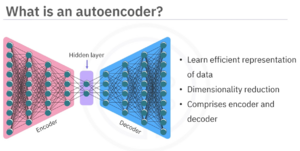

Autoencoders (unsupervised learning)

Autoencoding is a type of data compression algorithm where the compression (encoding) and decompression (decoding) functions are learned automatically from the data, typically using neural networks.

Key Features of Autoencoders

- Unsupervised Learning:

- Autoencoders are unsupervised neural network models.

- They use backpropagation, but the input itself acts as the output label during training.

- Data-Specific:

- Autoencoders are specialized for the type of data they are trained on and may not generalize well to data that is significantly different.

- Nonlinear Transformations:

- Autoencoders can learn nonlinear representations, which makes them more powerful than techniques like Principal Component Analysis (PCA) that can only handle linear transformations.

- Applications:

- Data Denoising: Removing noise from images, audio, or other data.

- Dimensionality Reduction: Reducing the number of features in data while preserving key information, often used for visualization or preprocessing.
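A minimal NumPy sketch of the ideas above: an undercomplete autoencoder that squeezes 4 inputs through a 2-unit tanh bottleneck and decodes them linearly, trained by plain gradient descent with the input itself as the target (no labels). The toy data, layer sizes, learning rate, and step count are illustrative assumptions; in practice such a model would normally be built in Keras.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 4-D points that lie on a 2-D subspace (assumed, so that a
# 2-unit bottleneck can reconstruct them well)
latent = rng.normal(size=(200, 2))
mixing = np.array([[1.0, 0.5, -0.5, 0.0],
                   [0.0, 1.0, 0.5, -1.0]])
X = 0.5 * latent @ mixing  # shape (200, 4)

# Encoder 4 -> 2 (tanh) and decoder 2 -> 4 (linear)
W1 = rng.normal(scale=0.1, size=(4, 2))
b1 = np.zeros(2)
W2 = rng.normal(scale=0.1, size=(2, 4))
b2 = np.zeros(4)

lr, steps = 0.2, 2000
losses = []
for step in range(steps):
    # Forward pass: the input is also the training target
    H = np.tanh(X @ W1 + b1)  # compressed code (bottleneck)
    X_hat = H @ W2 + b2       # reconstruction
    err = X_hat - X
    losses.append(np.mean(err ** 2))  # MSE reconstruction loss

    # Backpropagation of the MSE loss
    d_X_hat = 2.0 * err / err.size
    dW2 = H.T @ d_X_hat
    db2 = d_X_hat.sum(axis=0)
    dZ1 = (d_X_hat @ W2.T) * (1.0 - H ** 2)  # tanh'(z) = 1 - tanh(z)^2
    dW1 = X.T @ dZ1
    db1 = dZ1.sum(axis=0)

    # Plain gradient-descent update
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(f"initial MSE: {losses[0]:.4f}, final MSE: {losses[-1]:.4f}")
```

After training, the per-sample reconstruction error can also serve as an anomaly score: inputs unlike the training data tend to reconstruct poorly.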

Types of Autoencoders

- Standard Autoencoders:

- Composed of an encoder-decoder structure with a bottleneck layer for compressed representation.

- Denoising Autoencoders:

- Designed to reconstruct clean data from noisy inputs.

- Variational Autoencoders (VAEs):

- A probabilistic extension of autoencoders that can generate new data points similar to the training data.

- Sparse Autoencoders:

- Include a sparsity constraint to learn compressed representations efficiently.

Clarifications on Specific Points

- Restricted Boltzmann Machines (RBMs):

- While RBMs are related to autoencoders, they are not the same.

- RBMs are generative models used for pretraining deep networks, and they are not autoencoders.

- A more closely related concept is Deep Belief Networks (DBNs), which combine stacked RBMs.

- Fixing Imbalanced Datasets:

- While autoencoders can be used to oversample minority classes by learning the structure of the minority class and generating synthetic samples, this is not their primary application.

Applications of Autoencoders

- Data Denoising:

- Remove noise from images, audio, or other forms of data.

- Dimensionality Reduction:

- Learn compressed representations for data visualization or feature extraction.

- Estimating Missing Values:

- Reconstruct missing data by leveraging patterns in the dataset.

- Automatic Feature Extraction:

- Extract meaningful features from unstructured data like text, images, or time-series data.

- Anomaly Detection:

- Learn normal patterns in the data and identify deviations, useful for fraud detection, network intrusion, or manufacturing defect identification.

- Data Generation:

- Variational autoencoders can generate new, similar data points, such as synthetic images.

Keras Advanced Features

- Sequential API: Used for simple, linear stacks of layers.

- Functional API: Designed for more flexibility and control, enabling the creation of intricate models such as:

- Models with multiple inputs and outputs.

- Shared layers that can be reused.

- Models with non-sequential data flows, like multi-branch or residual networks.

Advantages of the Functional API:

- Flexibility: Enables construction of complex architectures like multi-branch and hierarchical models.

- Clarity: Provides an explicit and clear representation of the model structure.

- Reusability: Layers or models can be reused across different parts of the architecture.

Real-World Applications:

- Healthcare:

- Medical image analysis for disease detection (e.g., pneumonia detection from chest X-rays).

- Finance:

- Predicting market trends using time-series data.

- Autonomous Driving:

- Object detection for pedestrians or vehicles.

- Lane detection for road safety systems.

Keras Functional API and Subclassing API

```python
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, Dense

# Define the input
inputs = Input(shape=(784,))

# Define the layers
x = Dense(64, activation='relu')(inputs)
outputs = Dense(10, activation='softmax')(x)

# Create the model
model = Model(inputs=inputs, outputs=outputs)
model.summary()
```

Handling multiple inputs and outputs

```python
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, Dense, concatenate

# Define two sets of inputs
inputA = Input(shape=(64,))
inputB = Input(shape=(128,))

# The first branch operates on the first input
x = Dense(8, activation='relu')(inputA)
x = Dense(4, activation='relu')(x)

# The second branch operates on the second input
y = Dense(16, activation='relu')(inputB)
y = Dense(4, activation='relu')(y)

# Combine the outputs of the two branches
combined = concatenate([x, y])

# Add fully connected (FC) layers and a regression output
z = Dense(2, activation='relu')(combined)
z = Dense(1, activation='linear')(z)

# Create the model
model = Model(inputs=[inputA, inputB], outputs=z)
model.summary()
```

Shared layers

```python
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, Dense

# Define two inputs with the same shape
inputA = Input(shape=(28, 28, 1))
inputB = Input(shape=(28, 28, 1))

# Define a single layer that will be shared (one set of weights)
shared_dense = Dense(64, activation='relu')

# Process both inputs through the same shared layer
processed_1 = shared_dense(inputA)
processed_2 = shared_dense(inputB)

# Create a model whose two branches reuse the shared layer's weights
model = Model(inputs=[inputA, inputB], outputs=[processed_1, processed_2])
model.summary()
```

Practical example: Implementing a complex model

```python
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, Dense, Conv2D, MaxPooling2D, Flatten, concatenate
from tensorflow.keras.activations import relu

# First input model
inputA = Input(shape=(32, 32, 1))
x = Conv2D(32, (3, 3), activation=relu)(inputA)
x = MaxPooling2D((2, 2))(x)
x = Flatten()(x)
x = Model(inputs=inputA, outputs=x)

# Second input model
inputB = Input(shape=(32, 32, 1))
y = Conv2D(32, (3, 3), activation=relu)(inputB)
y = MaxPooling2D((2, 2))(y)
y = Flatten()(y)
y = Model(inputs=inputB, outputs=y)

# Combine the outputs of the two branches
combined = concatenate([x.output, y.output])

# Add fully connected (FC) layers and a regression output
z = Dense(64, activation='relu')(combined)
z = Dense(1, activation='linear')(z)

# Create the model
model = Model(inputs=[x.input, y.input], outputs=z)
model.summary()
```

Keras subclassing API

- Offers flexibility

- Defines custom and dynamic models

- Implemented by subclassing the Model class and defining its call method

- Used in research and development for custom training loops and non-standard architectures.

```python
import tensorflow as tf

# Define your model by subclassing tf.keras.Model
class MyModel(tf.keras.Model):
    def __init__(self):
        super(MyModel, self).__init__()
        # Define layers
        self.dense1 = tf.keras.layers.Dense(64, activation='relu')
        self.dense2 = tf.keras.layers.Dense(10, activation='softmax')

    def call(self, inputs):
        # Forward pass
        x = self.dense1(inputs)
        y = self.dense2(x)
        return y

# Instantiate the model
model = MyModel()

# A subclassed model has no weights until it knows its input shape,
# so build it before summary() (784 input features is an assumption)
model.build(input_shape=(None, 784))
model.summary()

# Define loss function and optimizer
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy()
optimizer = tf.keras.optimizers.Adam()

# Compile the model
model.compile(optimizer=optimizer, loss=loss_fn, metrics=['accuracy'])
```

Use Cases for the Keras Subclassing API:

- Models with Dynamic Architecture:

- The Subclassing API is ideal for creating models with architectures that vary dynamically based on the input or intermediate computations.

- Example: Recurrent neural networks (RNNs) with variable sequence lengths or architectures that depend on runtime conditions.

- Custom Training Loops:

- The Subclassing API offers fine-grained control over the training process, allowing you to implement custom training loops using GradientTape.

- Example: Adversarial training (e.g., GANs) or reinforcement learning models where the standard .fit() may not suffice.

- Research and Prototyping:

- It is particularly suited for rapid experimentation in research scenarios, enabling you to define and test novel model architectures.

- Example: Exploring new layer types, loss functions, or combining multiple types of inputs and outputs.

- Dynamic Graphs:

- Because the call method is ordinary Python, it can contain conditional logic and loops, so different operations can run at each step of the forward pass (a dynamic computation graph).

- Example: Models that use conditional logic such as if/else during the forward pass, like graph neural networks or decision-tree-inspired neural networks.

Dropout Layers

Dropout is a regularization technique that randomly zeroes out a fraction of neuron outputs during each training step. This reduces the network’s reliance on specific neurons and fosters more robust feature learning—helping mitigate overfitting.

Training vs. Inference

- Dropout is active only during training, where it stochastically drops neurons.

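- At inference (testing/prediction), dropout is disabled. Keras uses "inverted" dropout: during training, the surviving outputs are scaled by 1 / (1 - rate), so the expected output already matches inference and no rescaling is needed afterward.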

Dropout Rate (Hyperparameter)

- Determines the probability of zeroing out each neuron’s output.

- Common values range from 0.2 to 0.5, but it’s dataset and architecture dependent.
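The training/inference behavior can be sketched in NumPy as inverted dropout. This mirrors the scaling the Keras Dropout layer documents (survivors multiplied by 1 / (1 - rate)), but it is an illustrative sketch, not Keras' implementation; the all-ones activations are an assumption that makes the effect easy to see.

```python
import numpy as np

def dropout(x: np.ndarray, rate: float, training: bool, rng: np.random.Generator) -> np.ndarray:
    """Inverted dropout: random zeroing during training, identity at inference."""
    if not training or rate == 0.0:
        return x
    keep_prob = 1.0 - rate
    mask = rng.random(x.shape) < keep_prob  # True = keep this unit
    # Scale survivors so the expected activation matches inference
    return x * mask / keep_prob

rng = np.random.default_rng(0)
activations = np.ones((1000, 100))
train_out = dropout(activations, rate=0.5, training=True, rng=rng)
infer_out = dropout(activations, rate=0.5, training=False, rng=rng)

print((train_out == 0).mean())                 # close to 0.5 of units zeroed
print(train_out.mean())                        # close to 1.0 thanks to the scaling
print(np.array_equal(infer_out, activations))  # True
```

Each training step draws a fresh mask, so no single neuron can be relied upon, while at inference the layer is a no-op.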

TensorFlow Keras Example

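```python
from tensorflow.keras.layers import Input, Dense, Dropout

# Hypothetical network: 784 input features feeding a 128-unit hidden layer
inputs = Input(shape=(784,))
hidden_layer = Dense(128, activation='relu')(inputs)

# Apply 50% dropout to the hidden layer's outputs
dropout_layer = Dropout(rate=0.5)(hidden_layer)
```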

Overall, dropout promotes generalization by preventing co-adaptation of neurons. It is an easy-to-use, widely adopted method for combating overfitting in deep networks.

Summary

- Dropout randomly “turns off” neurons during training.

- It is disabled at inference, ensuring consistent outputs.

- The dropout rate sets the fraction of neurons dropped (typical ranges: 0.2–0.5).

- TensorFlow (and other frameworks) make it straightforward to implement.

Batch Normalization

Batch Normalization (BatchNorm) stabilizes and accelerates neural network training by normalizing activations using the statistics of the current mini-batch. It was introduced to reduce internal covariate shift, i.e., changes in the distribution of each layer's inputs as the preceding layers' weights are updated, and it often allows larger learning rates and therefore faster convergence.

- Operation

- Normalizes each channel to have near-zero mean and unit variance (based on the current mini-batch statistics).

- During training, BatchNorm uses batch statistics; during inference, it uses running averages of mean and variance.

- Learnable Scale & Shift

- Each BatchNorm layer introduces two trainable parameters: γ (scale) and β (shift). These parameters allow the model to adjust or “undo” normalization if needed, preserving representational power.

- TensorFlow Keras Example

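```python
from tensorflow.keras.layers import Input, Dense, BatchNormalization

# Hypothetical network: 784 input features feeding a 128-unit hidden layer
inputs = Input(shape=(784,))
hidden_layer = Dense(128)(inputs)

# Normalize the hidden layer's activations over each mini-batch
batch_norm_layer = BatchNormalization()(hidden_layer)
```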

Overall, BatchNorm often yields faster training, improved stability, and can help networks converge in fewer epochs.

- Summary

- BatchNorm normalizes activations, reducing internal covariate shift.

- It’s used both in training (via batch statistics) and inference (via running averages).

- Introduces two learnable parameters for scaling and shifting post-normalization.

- Can allow higher learning rates and faster convergence.
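The normalization described above can be sketched in NumPy for a (batch, features) input. The momentum 0.99 and epsilon 0.001 values are chosen to match Keras' documented BatchNormalization defaults, but this is an illustrative sketch rather than Keras' code, and the toy activations are assumed.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, running_mean, running_var,
                     momentum=0.99, eps=1e-3):
    """Training-mode BatchNorm for x of shape (batch, features)."""
    mean = x.mean(axis=0)  # per-feature mini-batch mean
    var = x.var(axis=0)    # per-feature mini-batch variance
    x_hat = (x - mean) / np.sqrt(var + eps)
    # Exponential moving averages, used later at inference time
    running_mean = momentum * running_mean + (1 - momentum) * mean
    running_var = momentum * running_var + (1 - momentum) * var
    return gamma * x_hat + beta, running_mean, running_var

def batch_norm_infer(x, gamma, beta, running_mean, running_var, eps=1e-3):
    """Inference mode: normalize with running averages instead of batch statistics."""
    return gamma * (x - running_mean) / np.sqrt(running_var + eps) + beta

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))  # activations far from zero mean
gamma, beta = np.ones(4), np.zeros(4)              # learnable scale and shift at init
y, rm, rv = batch_norm_train(x, gamma, beta, np.zeros(4), np.ones(4))
print(y.mean(axis=0).round(6), y.std(axis=0).round(3))
```

Setting gamma to the batch standard deviation, sqrt(var + eps), and beta to the batch mean reproduces the original activations exactly, which is how the learnable scale and shift can "undo" the normalization when that helps the model.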

Keras Custom Layers

Custom layers allow developers to extend the functionality of deep learning frameworks and tailor models to specific needs:

- Novel Research Ideas

- Integrate new algorithms or experimental methods.

- Rapidly prototype and evaluate cutting-edge techniques.

- Performance Optimization

- Fine-tune execution for specialized hardware or data structures.

- Reduce memory or computational overhead by customizing layer operations.

- Flexibility

- Define unique behaviors not covered by standard library layers.

- Experiment with unconventional architectures or parameterization.

- Reusability & Maintenance

- Bundle functionality into modular, readable components.

- Simplify debugging and collaboration by centralizing custom code.

Basics of Creating a Custom Layer

```python
import tensorflow as tf
from tensorflow.keras.layers import Layer

class MyCustomLayer(Layer):
    def __init__(self, units=32, **kwargs):
        super(MyCustomLayer, self).__init__(**kwargs)
        self.units = units

    def build(self, input_shape):
        # Define trainable weights (e.g., kernel and bias)
        self.w = self.add_weight(
            shape=(input_shape[-1], self.units),
            initializer='random_normal',
            trainable=True
        )
        self.b = self.add_weight(
            shape=(self.units,),
            initializer='zeros',
            trainable=True
        )

    def call(self, inputs):
        # Forward pass using the custom weight parameters
        return tf.matmul(inputs, self.w) + self.b

# Eager execution check
print(tf.executing_eagerly())  # Should print True in TF 2.x

a = tf.constant([1, 2, 3])
b = tf.constant([4, 5, 6])
result = tf.add(a, b)
print(result)
# Outputs: tf.Tensor([5 7 9], shape=(3,), dtype=int32)
```

Custom Dense Layer

```python
import tensorflow as tf
from tensorflow.keras.layers import Layer, Dense
from tensorflow.keras.models import Sequential

class CustomDenseLayer(Layer):
    def __init__(self, units=32):
        super(CustomDenseLayer, self).__init__()
        self.units = units

    def build(self, input_shape):
        # Define trainable weights (e.g., kernel and bias)
        self.w = self.add_weight(
            shape=(input_shape[-1], self.units),
            initializer='random_normal',
            trainable=True
        )
        self.b = self.add_weight(
            shape=(self.units,),
            initializer='zeros',
            trainable=True
        )

    def call(self, inputs):
        # Forward pass: affine transform followed by ReLU
        return tf.nn.relu(tf.matmul(inputs, self.w) + self.b)

model = Sequential([
    CustomDenseLayer(64),
    # CustomDenseLayer always applies ReLU, so use a softmax output layer to
    # produce the class probabilities that categorical_crossentropy expects
    Dense(10, activation='softmax')
])

model.compile(optimizer='adam', loss='categorical_crossentropy')
```

TensorFlow 2.x

- Eager Execution

- Executes operations immediately rather than building a static graph.

- Makes TensorFlow more intuitive and Pythonic, enabling more straightforward debugging.

- Immediate Feedback

- Facilitates interactive programming

- Simplified Code

- High-Level APIs

- Simplifies model building via Keras, offering an approachable, layer-based API.

- User Friendly

- Modular and composable

- Extensive documentation

- Integrates seamlessly with eager execution to facilitate rapid experimentation.


- Cross-Platform Support

- Compatible with various hardware backends (e.g., CPU, GPU, TPU).

- Mobile and embedded devices (TensorFlow Lite)

- Web (TensorFlow.js)

- Production ML pipelines (TensorFlow Extended, TFX)

- Scalability & Performance

- Graph compilation (tf.function) and distribution strategies (tf.distribute) support large-scale training.

- Suited to both research prototyping and production systems.

- Rich Ecosystem

- Extends TensorFlow with additional libraries (e.g., TensorFlow Extended, TensorFlow Lite, TensorFlow.js).

- Offers a broad set of tools for data processing, visualization, and distributed training.

- TensorFlow Hub

- Repository for reusable machine learning modules.

- TensorBoard

- Visualization toolkit for TensorFlow

Convolutional Neural Networks (CNNs)

Purpose: CNNs analyze visual data by leveraging patterns in the spatial structure of images, in a way loosely inspired by the hierarchical organization of the human visual system.

Key Components of CNNs:

- Convolutional Layers

- Perform feature extraction.

- Learn spatial hierarchies of features through kernels (filters) applied to the input data.

- Generate feature maps by convolving the input with a set of filters.

- Pooling Layers

- Perform downsampling to reduce the spatial dimensions of feature maps.

- Types:

- Max Pooling: Retains the maximum value in a pooling window.

- Average Pooling: Retains the average value in a pooling window.

- Reduces computational cost and can help control overfitting, since smaller feature maps mean fewer activations and fewer parameters in the layers that follow.

- Fully Connected (FC) Layers

- Flatten the high-level features from convolutional and pooling layers into a single vector.

- Use the vector for classification or regression tasks by passing it through dense layers and applying an activation function like softmax or sigmoid.

Workflow of CNNs:

- Input Image

- Raw pixel data of the image is input into the network.

- Convolutional Layers

- Extract low-level features like edges, corners, and textures. With deeper layers, higher-level features like shapes and objects are learned.

- Activation Function

- Non-linear activation functions (e.g., ReLU) are applied after convolution to introduce non-linearity and enable the network to learn complex patterns.

- Pooling Layers

- Reduce dimensionality while retaining key features. This decreases computational costs and improves model generalization.

- Fully Connected Layers

- Use learned features to make final predictions. Typically followed by a softmax or sigmoid activation for classification.

Expanded CNN Architecture Example:

- Input -> Convolution -> ReLU -> Pooling -> Convolution -> ReLU -> Pooling -> Fully Connected -> Output.

Additional Notes for Understanding:

- Padding

- Adds border pixels (usually zeros) around the input, typically to preserve spatial dimensions after convolution. Common padding types are:

- Valid Padding: No padding; the output is smaller than the input.

- Same Padding: Pads the input so that, with a stride of 1, the output has the same spatial dimensions as the input.

- Stride

- The number of pixels by which the filter moves during convolution. Larger strides reduce the spatial size of the output.

- Regularization Techniques

- Dropout: Randomly sets a fraction of input units to zero during training to prevent overfitting.

- Batch Normalization: Normalizes intermediate activations to improve stability and training speed.

- Data Augmentation

- Techniques like flipping, rotating, cropping, and scaling are used to increase the diversity of the training data and improve generalization.

- Applications of CNNs:

- Image classification.

- Object detection.

- Semantic segmentation.

- Medical imaging.

- Style transfer.

- Popular Architectures:

- LeNet: Early architecture for handwritten digit recognition.

- AlexNet: Introduced deeper networks with ReLU and dropout.

- VGGNet: Deep but simple networks with small filters.

- ResNet: Introduced residual connections to combat vanishing gradients.

- Inception: Used modules to increase depth and width efficiently.
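The effect of padding and stride can be computed with the standard output-size formulas Keras uses along each spatial axis: floor((n - k) / s) + 1 for "valid" padding and ceil(n / s) for "same" padding, where n (input size), k (kernel or pool size), and s (stride) are names introduced here. As a sketch, this traces the spatial size through the Basic CNN example that follows (64×64×3 input).

```python
import math

def conv_output_size(n: int, kernel: int, stride: int = 1, padding: str = "valid") -> int:
    """Output size along one spatial axis for a convolution or pooling layer."""
    if padding == "valid":  # no padding: the window must fit entirely inside
        return (n - kernel) // stride + 1
    if padding == "same":   # zero-pad so that output = ceil(n / stride)
        return math.ceil(n / stride)
    raise ValueError(f"unknown padding: {padding}")

n = 64
n = conv_output_size(n, 3)            # Conv2D(32, (3, 3))   -> 62
n = conv_output_size(n, 2, stride=2)  # MaxPooling2D((2, 2)) -> 31
n = conv_output_size(n, 3)            # Conv2D(64, (3, 3))   -> 29
n = conv_output_size(n, 2, stride=2)  # MaxPooling2D((2, 2)) -> 14
flattened = n * n * 64                # Flatten() over 64 channels
print(n, flattened)                   # 14 12544
```

With "same" padding and stride 1 the size is unchanged (64 stays 64), while stride 2 halves it (64 becomes 32), which matches the padding and stride notes above.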

Code Example: Basic CNN:

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

# Create a Sequential model
model = Sequential([
    Conv2D(32, (3, 3), activation='relu', input_shape=(64, 64, 3)),  # First Conv2D layer
    MaxPooling2D((2, 2)),                                            # First MaxPooling layer
    Conv2D(64, (3, 3), activation='relu'),                           # Second Conv2D layer
    MaxPooling2D((2, 2)),                                            # Second MaxPooling layer
    Flatten(),                                                       # Flatten the feature maps
    Dense(128, activation='relu'),                                   # Fully connected dense layer
    Dense(10, activation='softmax')                                  # Output layer for 10 classes
])

# Compile the model
model.compile(optimizer='adam',                  # Use Adam optimizer
              loss='categorical_crossentropy',   # Loss function for multi-class classification
              metrics=['accuracy'])              # Metrics to monitor during training

# Print a summary of the model
model.summary()
```

- Model Explanation:

- Conv2D(32, (3, 3)): Adds a convolutional layer with 32 filters of size 3x3.

- MaxPooling2D((2, 2)): Reduces spatial dimensions by taking the maximum value in each 2x2 window.

- Flatten(): Flattens the 2D feature maps into a 1D vector.

- Dense(128, activation='relu'): Fully connected layer with 128 units and ReLU activation.

- Dense(10, activation='softmax'): Output layer for classification into 10 classes using softmax activation.

- Input Shape:

input_shape=(64, 64, 3) assumes input images are 64x64 pixels with 3 color channels (RGB).

Advanced CNN Architectures

1. VGG (Visual Geometry Group Networks)

- Key Idea: Uniform small 3×3 convolutional filters.

- Max-Pooling Layers

- Fully Connected Layers

- Structure: Deep networks (e.g., VGG-16: 13 convolutional + 3 dense layers).

- Pros: Simplicity and depth for feature extraction.

- Cons: High computational cost.
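Why uniform small 3×3 filters work: stacking stride-1 3×3 convolutions grows the receptive field by 2 pixels per layer, so two of them see a 5×5 region and three see 7×7, while using fewer weights than one large filter and adding an extra ReLU in between. A quick count, assuming C input and C output channels per layer and ignoring biases (C = 64 is an arbitrary choice):

```python
def conv_weights(kernel: int, channels: int) -> int:
    # kernel x kernel x in_channels x out_channels (biases ignored)
    return kernel * kernel * channels * channels

def receptive_field(num_layers: int, kernel: int = 3) -> int:
    # Each extra stride-1 layer grows the receptive field by (kernel - 1)
    return 1 + num_layers * (kernel - 1)

C = 64
print(receptive_field(2), 2 * conv_weights(3, C), conv_weights(5, C))  # 5 73728 102400
print(receptive_field(3), 3 * conv_weights(3, C), conv_weights(7, C))  # 7 110592 200704
```

Three stacked 3×3 layers cover the same 7×7 region as a single 7×7 filter with about 45% fewer weights.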

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense

# Create a small VGG-style Sequential model (much smaller than VGG-16)
model = Sequential([
    Conv2D(32, (3, 3), activation='relu', input_shape=(64, 64, 3)),  # First Conv2D layer
    Conv2D(32, (3, 3), activation='relu'),                           # Second Conv2D layer
    MaxPooling2D((2, 2)),                                            # First MaxPooling layer
    Conv2D(128, (3, 3), activation='relu'),                          # Third Conv2D layer
    Conv2D(128, (3, 3), activation='relu'),                          # Fourth Conv2D layer
    MaxPooling2D((2, 2)),                                            # Second MaxPooling layer
    Conv2D(256, (3, 3), activation='relu'),                          # Fifth Conv2D layer
    Conv2D(256, (3, 3), activation='relu'),                          # Sixth Conv2D layer
    MaxPooling2D((2, 2)),                                            # Third MaxPooling layer
    Flatten(),                                                       # Flatten the feature maps
    Dense(512, activation='relu'),                                   # First fully connected dense layer
    Dense(512, activation='relu'),                                   # Second fully connected dense layer
    Dense(10, activation='softmax')                                  # Output layer for 10 classes
])

# Compile the model
model.compile(optimizer='adam',                  # Use Adam optimizer
              loss='categorical_crossentropy',   # Loss function for multi-class classification
              metrics=['accuracy'])              # Metrics to monitor during training

# Print a summary of the model
model.summary()
```

Explanation of Key Components:

- Conv2D Layers:

- 32, 128, and 256 filters: the number of filters grows with depth, so later layers can extract more (and more abstract) features.

- 3×3 kernels are used consistently for feature extraction.

- MaxPooling2D Layers:

- MaxPooling2D((2, 2)) downsamples the feature maps, halving each spatial dimension to reduce computational cost while preserving the most important features.

- Flatten Layer:

- Converts the 3D feature maps into a 1D vector for dense layer input.

- Dense Layers:

- Two fully connected layers with 512 neurons each, followed by a softmax layer for 10-class classification.

- Compilation:

- Adam optimizer: Adaptive learning rate optimization for faster convergence.

- Categorical Crossentropy: Suitable for multi-class classification problems.

2. ResNet (Residual Networks)

- Key Idea: Residual connections (skip connections) to combat vanishing gradients.

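- Structure: Each residual block computes Output = F(Input) + Input, where F is the block's stack of layers and the skip connection carries the input around F.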

- Pros: Enables very deep networks (e.g., ResNet-50, ResNet-101).

- Cons: Increased complexity.

```python
from tensorflow.keras.layers import (Input, Conv2D, BatchNormalization, Activation, Add,
                                     MaxPooling2D, Dense, GlobalAveragePooling2D)
from tensorflow.keras.models import Model

# Residual Block: output = ReLU(F(x) + shortcut)
def residual_block(x, filters, kernel_size=3, stride=1):
    shortcut = x
    x = Conv2D(filters, kernel_size, strides=stride, padding='same')(x)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    x = Conv2D(filters, kernel_size, strides=1, padding='same')(x)
    x = BatchNormalization()(x)
    # If the block downsamples or changes the channel count, project the shortcut
    # with a 1x1 convolution so both tensors have the same shape for Add()
    if stride != 1 or shortcut.shape[-1] != filters:
        shortcut = Conv2D(filters, 1, strides=stride, padding='same')(shortcut)
        shortcut = BatchNormalization()(shortcut)
    x = Add()([x, shortcut])
    x = Activation('relu')(x)
    return x

# ResNet-like Model
def resnet_like(input_shape=(64, 64, 3), num_classes=10):
    inputs = Input(shape=input_shape)

    # Initial Conv Layer
    x = Conv2D(64, (7, 7), strides=2, padding='same')(inputs)
    x = BatchNormalization()(x)
    x = Activation('relu')(x)
    x = MaxPooling2D((3, 3), strides=2, padding='same')(x)

    # Residual Blocks
    x = residual_block(x, 64)
    x = residual_block(x, 64)
    x = residual_block(x, 128, stride=2)
    x = residual_block(x, 128)
    x = residual_block(x, 256, stride=2)
    x = residual_block(x, 256)

    # Global Average Pooling and Output
    x = GlobalAveragePooling2D()(x)
    x = Dense(num_classes, activation='softmax')(x)

    # Create Model
    model = Model(inputs, x)
    return model

# Create and compile the ResNet-like model
model = resnet_like()
model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

# Print the model summary
model.summary()
```

3. Inception Networks

- Key Idea: Multi-scale feature extraction with filters of different sizes (e.g., 1×1, 3×3, 5×5).

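- Structure: Inception modules run these filters in parallel branches and concatenate their outputs; 1×1 "bottleneck" convolutions reduce the number of channels before the expensive 3×3 and 5×5 convolutions.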

- Pros: Efficient and versatile.

- Cons: Complex architecture design.
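To see why the 1×1 bottleneck matters, here is a back-of-the-envelope multiply count in plain Python. The layer sizes (a 28×28×192 input, 32 filters of size 5×5, a 16-channel bottleneck) are illustrative assumptions rather than values from a specific Inception model, and biases are ignored.

```python
def conv_multiplies(height, width, in_channels, out_channels, kernel):
    """Multiplications for a 'same'-padded, stride-1 convolution (bias ignored)."""
    return height * width * out_channels * kernel * kernel * in_channels

# Hypothetical branch: 28x28x192 input -> 32 output maps with 5x5 filters
direct = conv_multiplies(28, 28, 192, 32, 5)

# Same branch with a 1x1 bottleneck that first reduces 192 channels to 16
bottleneck = conv_multiplies(28, 28, 192, 16, 1) + conv_multiplies(28, 28, 16, 32, 5)

print(f"direct 5x5:   {direct:,}")      # 120,422,400
print(f"1x1 then 5x5: {bottleneck:,}")  # 12,443,648
print(f"reduction:    {direct / bottleneck:.1f}x")
```

The output shape is the same in both cases (28×28×32); only the cost drops, by roughly an order of magnitude here.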

Data Augmentation Techniques

Purpose:

- Enhance the robustness and generalization of models.

- Artificially expand the training dataset by introducing variations.

- Reduce overfitting by exposing the model to diverse examples.

- Improve performance on unseen data by mimicking real-world variations.

Common Data Augmentation Techniques

- Rotation:

- Rotate images by a random degree within a specified range (e.g., ±30°).

- Helps the model learn invariance to orientation changes.

- Translations:

- Shift the image horizontally or vertically by a certain number of pixels.

- Useful for objects that might not always be centered in the frame.

- Flipping:

- Horizontal flipping: Mirrors the image along the vertical axis.

- Vertical flipping (less common): Mirrors the image along the horizontal axis.

- Effective for symmetrical objects or scenes.

- Scaling:

- Resize the image by a random factor, either zooming in or out.

- Helps the model learn invariance to object sizes.

- Adding Noise:

- Inject random noise (e.g., Gaussian noise, salt-and-pepper noise) to simulate variations in image quality.

- Helps the model become robust to noisy or low-quality inputs.

Other Useful Techniques:

- Shearing:

- Distort the image by slanting it along an axis.

- Simulates different perspectives or viewing angles.

- Cropping:

- Randomly crop parts of the image to simulate zoomed-in views or partial occlusions.

- Brightness, Contrast, and Color Adjustments:

- Alter the brightness, contrast, saturation, or hue of the image.

- Makes the model robust to varying lighting conditions.

- Random Erasing:

- Randomly mask out parts of the image.

- Forces the model to rely on other parts of the image for prediction.

- CutMix and MixUp:

- CutMix: Combines parts of two images and mixes their labels.

- MixUp: Mixes two images and their labels linearly.
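CutMix and MixUp are not built into ImageDataGenerator, so here is a minimal NumPy sketch of both. It follows the usual formulation (mixing weight λ drawn from a Beta(α, α) distribution, CutMix box area proportional to 1 − λ); the function names and α defaults are illustrative assumptions, images are float (H, W, C) arrays, and labels are one-hot vectors.

```python
import numpy as np

rng = np.random.default_rng(0)

def mixup(img_a, label_a, img_b, label_b, alpha=0.2):
    """MixUp: blend two images and their one-hot labels with the same weight."""
    lam = rng.beta(alpha, alpha)
    image = lam * img_a + (1.0 - lam) * img_b
    label = lam * label_a + (1.0 - lam) * label_b
    return image, label

def cutmix(img_a, label_a, img_b, label_b, alpha=1.0):
    """CutMix: paste a random box from img_b into img_a; mix labels by box area."""
    h, w = img_a.shape[:2]
    lam = rng.beta(alpha, alpha)
    cut_h, cut_w = int(h * np.sqrt(1.0 - lam)), int(w * np.sqrt(1.0 - lam))
    cy, cx = rng.integers(h), rng.integers(w)  # box centre
    y1, y2 = np.clip([cy - cut_h // 2, cy + cut_h // 2], 0, h)
    x1, x2 = np.clip([cx - cut_w // 2, cx + cut_w // 2], 0, w)
    image = img_a.copy()
    image[y1:y2, x1:x2] = img_b[y1:y2, x1:x2]
    # Recompute the weight from the pasted area (the box may be clipped at the border)
    lam_adjusted = 1.0 - ((y2 - y1) * (x2 - x1)) / (h * w)
    label = lam_adjusted * label_a + (1.0 - lam_adjusted) * label_b
    return image, label

# Usage: a black and a white image from two different classes
img_a, img_b = np.zeros((32, 32, 3)), np.ones((32, 32, 3))
y_a, y_b = np.array([1.0, 0.0]), np.array([0.0, 1.0])
mixed_img, mixed_label = mixup(img_a, y_a, img_b, y_b)
cut_img, cut_label = cutmix(img_a, y_a, img_b, y_b)
print(mixed_label, cut_label)  # each label vector sums to 1
```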

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# Create an ImageDataGenerator instance with augmentation techniques

datagen = ImageDataGenerator(

rotation_range=30, # Rotate images by up to 30 degrees

width_shift_range=0.2, # Translate images horizontally by 20% of width

height_shift_range=0.2, # Translate images vertically by 20% of height

horizontal_flip=True, # Randomly flip images horizontally

zoom_range=0.2, # Zoom in/out by up to 20%

brightness_range=[0.8, 1.2], # Adjust brightness (80% to 120%)

fill_mode='nearest' # Fill missing pixels after transformations

)

# Example: Applying augmentations to a single image

import matplotlib.pyplot as plt

from tensorflow.keras.preprocessing.image import img_to_array, load_img, array_to_img

image = load_img('example.jpg', target_size=(64, 64)) # Load image

image_array = img_to_array(image) # Convert to array

image_array = image_array.reshape((1,) + image_array.shape) # Add batch dimension

# Generate and display four augmented images (flow() loops forever, so stop manually)

i = 0
for batch in datagen.flow(image_array, batch_size=1):
    plt.figure(i)
    plt.imshow(array_to_img(batch[0]))  # Convert the array back to a displayable image
    i += 1
    if i % 4 == 0:
        break
plt.show()

ImageDataGenerator creates augmented images on the fly by randomly rotating, shifting, flipping, zooming, and adjusting the brightness of the input images.

The fill_mode parameter in the ImageDataGenerator class specifies how the pixels outside the boundaries of an image are handled when transformations like rotation, shifting, or zooming are applied. These transformations can result in some parts of the image being "pushed out" of the frame, leaving empty areas. The fill_mode determines how these empty areas are filled.
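The four fill modes can be pictured on a single row of pixels "abcd". This sketch uses NumPy's np.pad rather than Keras; the mapping of each Keras mode to an np.pad mode is an assumption based on the examples in the Keras documentation ('constant' → kkkk|abcd|kkkk, 'nearest' → aaaa|abcd|dddd, 'reflect' → abcddcba|abcd|dcbaabcd, 'wrap' → abcdabcd|abcd|abcdabcd).

```python
import numpy as np

row = np.array([1, 2, 3, 4])  # one row of pixels: "abcd"

# Approximate NumPy analogues of each fill_mode, padding 2 pixels per side
fill_modes = {
    "constant": np.pad(row, 2, mode="constant", constant_values=0),  # cval = 0
    "nearest": np.pad(row, 2, mode="edge"),       # repeat the border pixel
    "reflect": np.pad(row, 2, mode="symmetric"),  # mirror, including the border pixel
    "wrap": np.pad(row, 2, mode="wrap"),          # tile the image periodically
}
for name, padded in fill_modes.items():
    print(f"{name:>8}: {padded}")
```

'nearest' (the default) tends to look least artificial for small shifts, while 'constant' leaves visible black borders.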

The featurewise_center option in ImageDataGenerator performs feature-wise mean centering: it subtracts the dataset mean from every input so that the dataset as a whole has a mean of 0. The mean is not known in advance; it is computed when you call datagen.fit() on the training data, and in Keras it is computed per color channel (over all images and pixel positions), not per individual pixel.

Feature-Wise Normalization

- Definition: Normalize the entire dataset feature-by-feature, based on the dataset's mean and standard deviation.

- Use Case: Ensures all features have similar ranges, which helps models converge faster during training.

- How It Works:

- Compute the mean and standard deviation of each feature across the whole dataset (for ImageDataGenerator, one mean and one standard deviation per color channel).

- Subtract the mean and divide by the standard deviation for each feature.

datagen = ImageDataGenerator(featurewise_center=True, featurewise_std_normalization=True)

# Fit the generator on the dataset to compute statistics

datagen.fit(training_data) # training_data is a NumPy array of images
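What fit() computes for featurewise normalization can be sketched directly in NumPy. This assumes per-channel statistics as described above and uses random values as a stand-in for training_data; it mirrors the idea, not Keras's exact code.

```python
import numpy as np

rng = np.random.default_rng(0)
training_data = rng.uniform(0, 255, size=(100, 32, 32, 3))  # stand-in dataset of 100 RGB images

# Per-channel statistics pooled over images, rows and columns -> shape (3,)
mean = training_data.mean(axis=(0, 1, 2))
std = training_data.std(axis=(0, 1, 2))

# Broadcasting applies the same channel statistics to every image
normalized = (training_data - mean) / (std + 1e-6)  # small epsilon avoids division by zero

print(normalized.mean(axis=(0, 1, 2)))  # close to [0, 0, 0]
print(normalized.std(axis=(0, 1, 2)))   # close to [1, 1, 1]
```

Because the statistics come from the training set, the same mean and std must be reused for validation and test images.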

Sample-Wise Normalization

- Definition: Normalize each individual sample (image) independently by subtracting the sample's mean and dividing by its standard deviation.

- Use Case: Useful when you want to normalize each image separately, especially if the dataset contains images with different intensity distributions.

- How It Works:

- For each image, compute its mean and standard deviation.

- Normalize the image by subtracting its mean and dividing by its standard deviation.
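For contrast with the feature-wise version, here is a NumPy sketch of sample-wise normalization: each image uses only its own statistics, so a dark image and a bright image both end up with mean 0 and standard deviation 1. The two random images and the helper name samplewise_normalize are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
# Two stand-in images with very different brightness
images = np.stack([rng.uniform(0, 50, (32, 32, 3)), rng.uniform(150, 255, (32, 32, 3))])

def samplewise_normalize(image, eps=1e-6):
    """Center and scale one image using only its own mean and standard deviation."""
    return (image - image.mean()) / (image.std() + eps)

normalized = np.stack([samplewise_normalize(img) for img in images])
for img in normalized:
    print(round(float(img.mean()), 6), round(float(img.std()), 6))  # about 0 and 1 for each image
```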

datagen = ImageDataGenerator(samplewise_center=True, samplewise_std_normalization=True)

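# fit() is only required for featurewise statistics or ZCA whitening; samplewise
# normalization is computed per image, so the generator can be used directly, e.g.
# model.fit(datagen.flow(training_data, training_labels, batch_size=32), epochs=10)

import matplotlib.pyplot as plt
from tensorflow.keras.preprocessing.image import array_to_img

# Preview four normalized batches (flow() loops forever, so stop manually)
i = 0
for batch in datagen.flow(training_data, batch_size=32):
    plt.figure(i)
    plt.imshow(array_to_img(batch[0]))  # array_to_img rescales values to 0-255 for display
    i += 1
    if i % 4 == 0:
        break
plt.show()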

Custom Augmentation Functions

- Definition: Define your own augmentation logic when the built-in transformations are insufficient or you need specialized augmentations.

- How It Works:

- Write a custom function that modifies the input data (e.g., applying specific transformations or injecting custom noise).

- Pass the function as part of a data preprocessing pipeline.

import numpy as np
import matplotlib.pyplot as plt
from tensorflow.keras.preprocessing.image import ImageDataGenerator, array_to_img

# Define a custom function to add random noise.
# It receives one image at a time and assumes training_images is already scaled to [0, 1].
def add_noise(image):
    noise = np.random.normal(loc=0, scale=0.1, size=image.shape)  # Gaussian noise
    return np.clip(image + noise, 0, 1)  # Ensure pixel values remain in [0, 1]

# Create a DataGenerator with a custom preprocessing function
datagen = ImageDataGenerator(preprocessing_function=add_noise)

# Use the generator: preview four noisy batches
i = 0
for batch in datagen.flow(training_images, batch_size=32):
    plt.figure(i)
    plt.imshow(array_to_img(batch[0]))
    i += 1
    if i % 4 == 0:
        break
plt.show()
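The Random Erasing technique listed earlier fits the same pattern as add_noise. Below is a minimal sketch of one way to write it, assuming a float (H, W, C) image in [0, 1]; the function name random_erase and its parameters (max_fraction, p) are hypothetical choices, not a Keras API.

```python
import numpy as np

rng = np.random.default_rng(0)

def random_erase(image, max_fraction=0.25, p=0.5):
    """With probability p, replace one random rectangle of the image with uniform noise.

    Assumes an (H, W, C) float image scaled to [0, 1].
    """
    if rng.random() > p:
        return image  # leave this image untouched
    h, w = image.shape[:2]
    erase_h = rng.integers(1, max(1, int(h * max_fraction)) + 1)
    erase_w = rng.integers(1, max(1, int(w * max_fraction)) + 1)
    top = rng.integers(0, h - erase_h + 1)
    left = rng.integers(0, w - erase_w + 1)
    erased = image.copy()  # never modify the caller's array in place
    erased[top:top + erase_h, left:left + erase_w] = rng.uniform(
        0, 1, size=(erase_h, erase_w) + image.shape[2:])
    return erased

sample = np.zeros((32, 32, 3))
erased_sample = random_erase(sample, p=1.0)
print(np.count_nonzero(erased_sample), "values replaced")
```

It can be passed to Keras the same way as add_noise: ImageDataGenerator(preprocessing_function=random_erase).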

Transfer Learning in Keras

import sys

import os

import numpy as np

from tensorflow.keras.applications import VGG16

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Flatten

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from PIL import Image

# Python's default recursion limit is already 1000, so this call leaves it unchanged;
# raise the value only if you actually hit a RecursionError with a deep model

sys.setrecursionlimit(1000)

# Ensure the dataset directory exists and generate sample data if needed

def generate_sample_data():
    os.makedirs('training_data/class_1', exist_ok=True)
    os.makedirs('training_data/class_2', exist_ok=True)
    for i in range(10):
        img = Image.fromarray(np.random.randint(0, 255, (224, 224, 3), dtype=np.uint8))
        img.save(f'training_data/class_1/img_{i}.jpg')
        img.save(f'training_data/class_2/img_{i}.jpg')

# Generate sample data (Uncomment if needed)

# generate_sample_data()

# Load the VGG16 model pre-trained on ImageNet (without the top layers)

base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# What include_top=False Does

# Removes the Fully Connected (Dense) Layers from the original pretrained model.

# Keeps only the Convolutional Base (Feature Extractor).

# Allows Customization by letting you add your own fully connected layers for classification.

# Freeze the base model layers

for layer in base_model.layers:
    layer.trainable = False

# Create a new model and add the base model and new layers

model = Sequential([

base_model,

Flatten(),

Dense(256, activation='relu'),

Dense(1, activation='sigmoid') # Change this for multi-class classification

])

# Compile the model

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Print model summary

model.summary()

# Load and preprocess the dataset

train_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

'training_data',

target_size=(224, 224),

batch_size=32,

class_mode='binary' # Two classes, so labels are 0/1 (use 'categorical' for more classes)

)

# Train the model

model.fit(train_generator, epochs=10) # Train only the new classifier head (the base is frozen)

Fine-Tuning the Pre-trained Model VGG16

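# Unfreeze the top layers of the base model (in VGG16 without its top, the last 4 layers
# are the three block5 convolutions and the block5 max-pooling layer)

for layer in base_model.layers[-4:]:
    layer.trainable = True

# Recompile so the change in trainable layers takes effect; use a low learning rate
# so the pretrained weights are only adjusted slightly

from tensorflow.keras.optimizers import Adam

model.compile(optimizer=Adam(learning_rate=0.0001), loss='binary_crossentropy', metrics=['accuracy'])

# Train the model again (fine-tuning)

model.fit(train_generator, epochs=10)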

Using Pre-trained models

Using a pre-trained model for feature extraction.

import os

import shutil

from PIL import Image

import numpy as np

# Define the base directory for sample data

base_dir = 'sample_data'

class1_dir = os.path.join(base_dir, 'class1')

class2_dir = os.path.join(base_dir, 'class2')

# Create directories for two classes

os.makedirs(class1_dir, exist_ok=True)

os.makedirs(class2_dir, exist_ok=True)

# Function to generate and save random images

def generate_random_images(save_dir, num_images):
    for i in range(num_images):
        # Generate a random RGB image of size 224x224
        img_array = np.random.randint(0, 255, (224, 224, 3), dtype=np.uint8)
        img = Image.fromarray(img_array)
        img.save(os.path.join(save_dir, f"img_{i}.jpg"))

# Generate sample data for class1 and class2

generate_random_images(class1_dir, 100)

generate_random_images(class2_dir, 100)

print("Sample images generated successfully!")

Example: Pretrained model for extracting features

from tensorflow.keras.applications import VGG16

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Flatten

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from tensorflow.keras.optimizers import Adam

# Load the VGG16 model pre-trained on ImageNet

base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Freeze all layers initially

for layer in base_model.layers:
    layer.trainable = False

# Create a new model and add the base model and new layers

model = Sequential([

base_model,

Flatten(),

Dense(256, activation='relu'),

Dense(1, activation='sigmoid') # Change for multi-class classification if needed

])

# Compile the model

model.compile(optimizer=Adam(learning_rate=0.001),

loss='binary_crossentropy',

metrics=['accuracy'])

train_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

'/content/sample_data', # Update with your dataset path

target_size=(224, 224),

batch_size=32,

class_mode='binary' # Use 'categorical' for multi-class classification

)

# Train the model with frozen layers

model.fit(train_generator, epochs=10)

# Gradually unfreeze layers and fine-tune

for layer in base_model.layers[-4:]:  # Unfreeze the last 4 layers
    layer.trainable = True

# Compile the model again with a lower learning rate for fine-tuning

model.compile(optimizer=Adam(learning_rate=0.0001),

loss='binary_crossentropy',

metrics=['accuracy'])

# Train the model again for fine-tuning

model.fit(train_generator, epochs=10) # Fine-tune for additional epochs

# Modify data generator to include validation data

train_datagen = ImageDataGenerator(rescale=1./255, validation_split=0.2)

train_generator = train_datagen.flow_from_directory(

'sample_data',

target_size=(224, 224),

batch_size=32,

class_mode='binary',

subset='training'

)

validation_generator = train_datagen.flow_from_directory(

'sample_data',

target_size=(224, 224),

batch_size=32,

class_mode='binary',

subset='validation'

)

# Train the model with validation data

history = model.fit(train_generator, epochs=10, validation_data=validation_generator)

# Plot training and validation loss

import matplotlib.pyplot as plt

plt.plot(history.history['loss'], label='Training Loss')

plt.plot(history.history['val_loss'], label='Validation Loss')

plt.title('Training and Validation Loss')

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.legend()

plt.show()

TensorFlow

Image manipulation tasks:

- classification

- data augmentation

import tensorflow as tf

from tensorflow.keras.preprocessing.image import ImageDataGenerator, img_to_array, load_img

# Load and preprocess the image

img = load_img('/content/path_to_image.jpg', target_size=(224, 224)) # Load image with resizing

img_array = img_to_array(img) # Convert image to NumPy array

img_array = tf.expand_dims(img_array, 0) # Add batch dimension

# Display the original image

import matplotlib.pyplot as plt

plt.imshow(img) # Show image

plt.show()

Data Augmentation techniques

import tensorflow as tf

from tensorflow.keras.preprocessing.image import ImageDataGenerator, img_to_array, load_img

import matplotlib.pyplot as plt

# Define ImageDataGenerator with augmentation parameters

datagen = ImageDataGenerator(

rotation_range=40, # Rotate images up to 40 degrees

width_shift_range=0.2, # Shift horizontally by 20% of width

height_shift_range=0.2, # Shift vertically by 20% of height

shear_range=0.2, # Shear transformation

zoom_range=0.2, # Zoom in/out by 20%

horizontal_flip=True, # Flip images horizontally

fill_mode='nearest' # Fill missing pixels with nearest values

)

# Load the image

img = load_img('/content/path_to_image.jpg') # Update with actual image path

x = img_to_array(img) # Convert image to array

x = x.reshape((1,) + x.shape) # Add batch dimension

# Generate augmented images and display them

i = 0
for batch in datagen.flow(x, batch_size=1):
    plt.figure(i)
    imgplot = plt.imshow(tf.keras.preprocessing.image.array_to_img(batch[0]))  # Convert back to image format
    i += 1
    if i % 4 == 0:
        break
plt.show()

Transpose Convolution

Transpose Convolution (Deconvolution) in Deep Learning

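Transpose convolution (also called deconvolution or up-convolution) is used in deep learning to increase the spatial dimensions of a feature map. Despite the name "deconvolution", it is not the mathematical inverse of a convolution; it is a learnable upsampling layer. It is commonly used in tasks like: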

✅ Image Generation (e.g., GANs - Generative Adversarial Networks)

✅ Super-Resolution (enhancing image resolution)

✅ Semantic Segmentation (assigning pixel-wise labels to images, e.g., U-Net)

How Transpose Convolution Works

- Inserts zeros between input pixels (stride - 1 zeros between neighbouring pixels when the stride is greater than 1)

- Expands the input feature map without learning new information.

- Applies a standard convolution operation

- Uses a kernel (filter) to learn features and generate an upsampled output.

- Upsamples the feature map

- The output size is larger than the input size, increasing spatial resolution.
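The steps above can be checked with a 1D NumPy sketch (stride 2, no padding, made-up kernel values). It computes a transpose convolution twice: directly, by letting each input value "stamp" a scaled copy of the kernel into the output, and via the zero-insertion view followed by an ordinary convolution. Both give an output of length (n − 1) × stride + kernel_size; Keras's Conv2DTranspose with padding='same' instead trims this to input size × stride.

```python
import numpy as np

def transpose_conv1d(x, kernel, stride):
    """Direct definition: each input value adds a scaled copy of the kernel to the output."""
    k = len(kernel)
    out = np.zeros((len(x) - 1) * stride + k)
    for i, value in enumerate(x):
        out[i * stride:i * stride + k] += value * kernel
    return out

def zero_insert_then_conv(x, kernel, stride):
    """Equivalent view: insert (stride - 1) zeros between inputs, then a 'full' convolution."""
    upsampled = np.zeros((len(x) - 1) * stride + 1)
    upsampled[::stride] = x
    # np.convolve flips the kernel (true convolution), which matches the scatter view above
    return np.convolve(upsampled, kernel, mode="full")

x = np.array([1.0, 2.0, 3.0])
kernel = np.array([1.0, 0.5, 0.25])
print(transpose_conv1d(x, kernel, stride=2))       # 3 inputs -> 7 outputs
print(zero_insert_then_conv(x, kernel, stride=2))  # same values
```

In a real layer the kernel values are learned, which is what lets the upsampling "reconstruct spatial details" rather than just interpolate.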

Comparison: Normal vs. Transpose Convolution

| Convolution (Downsampling) | Transpose Convolution (Upsampling) |
|---|---|
| Reduces spatial dimensions | Increases spatial dimensions |
| Extracts features | Reconstructs spatial details |
| Used in CNN encoder (feature extraction) | Used in CNN decoder (image reconstruction) |

Use Cases of Transpose Convolution

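✅ Generative Adversarial Networks (GANs)

- DCGAN generators use strided transpose convolutions to upsample a random noise vector into an image.

✅ Super-Resolution

- Enhances the quality of low-resolution images (some models such as FSRCNN use transpose convolution; SRGAN and ESRGAN instead use sub-pixel convolution or interpolation followed by convolution).

✅ Semantic Segmentation (e.g., U-Net)

- Converts feature maps back to full-resolution pixel-wise masks (DeepLabV3+ uses bilinear upsampling for this step instead).

✅ Autoencoders (Variational Autoencoders - VAEs)

- Used in decoder networks to reconstruct images.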

import os

import logging

import tensorflow as tf

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, Conv2DTranspose

# Set environment variables to suppress TensorFlow warnings

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3' # Ignore INFO, WARNING, and ERROR messages

os.environ['TF_ENABLE_ONEDNN_OPTS'] = '0' # Turn off oneDNN custom operations

# Use logging to suppress TensorFlow warnings

logging.getLogger('tensorflow').setLevel(logging.ERROR)

# Define the input layer

input_layer = Input(shape=(28, 28, 1)) # Example: grayscale image input

# Add a transpose convolution layer (upsampling)

transpose_conv_layer = Conv2DTranspose(

filters=32,

kernel_size=(3, 3),

strides=(2, 2), # Upsamples the spatial dimensions

padding='same',

activation='relu'

)(input_layer)

# Define the output layer (final upsampling)

output_layer = Conv2DTranspose(

filters=1,

kernel_size=(3, 3),

activation='sigmoid',

padding='same'

)(transpose_conv_layer)

# Create the model

model = Model(inputs=input_layer, outputs=output_layer)

# Compile the model

model.compile(optimizer='adam', loss='mean_squared_error', metrics=['mae']) # Accuracy is not meaningful for pixel-value regression; track mean absolute error instead

# Print model summary

model.summary()

Issues with Transpose Convolution & How to Mitigate Them

When using Transpose Convolution (Conv2DTranspose), certain artifacts and issues can occur, particularly checkerboard artifacts caused by uneven kernel overlaps.

Issues in Transpose Convolution

- Checkerboard Artifacts

- Occur due to uneven overlap when applying the transposed convolution.

- Some pixels receive more updates than others, leading to an uneven pixel distribution.

- Common in image generation (GANs), segmentation (U-Net), and super-resolution.

- Uneven Overlap of Convolution Kernels

- When the kernel size is not divisible by the stride, some output pixels are covered by more kernel windows than their neighbours.

- This leads to distortions in the generated images.
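The uneven overlap can be made visible by counting, for a 1D transpose convolution, how many kernel windows land on each output position. This NumPy sketch uses a hypothetical helper overlap_counts; it shows that kernel size 3 with stride 2 alternates between 1 and 2 contributions (the 1D version of a checkerboard), while kernel size 4 with stride 2 is even in the interior. Choosing a kernel size divisible by the stride is one commonly cited mitigation, alongside the upsampling approach below.

```python
import numpy as np

def overlap_counts(input_len, kernel_size, stride):
    """Number of kernel windows covering each output position of a 1D transpose convolution."""
    counts = np.zeros((input_len - 1) * stride + kernel_size, dtype=int)
    for i in range(input_len):
        counts[i * stride:i * stride + kernel_size] += 1
    return counts

print(overlap_counts(5, kernel_size=3, stride=2))  # [1 1 2 1 2 1 2 1 2 1 1]
print(overlap_counts(5, kernel_size=4, stride=2))  # [1 1 2 2 2 2 2 2 2 2 1 1]
```

In 2D the same pattern appears along both axes, which produces the grid-like checkerboard.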

How to Mitigate These Issues

✅ 1. Use Bilinear Upsampling Instead of Transpose Convolution

- Apply bilinear interpolation first, then apply a regular convolution (Conv2D) to refine features.

import os

import logging

import tensorflow as tf

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, UpSampling2D, Conv2D

# Suppress TensorFlow warnings

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3' # Ignore INFO, WARNING, and ERROR messages

os.environ['TF_ENABLE_ONEDNN_OPTS'] = '0' # Turn off oneDNN custom operations

logging.getLogger('tensorflow').setLevel(logging.ERROR)

# Define the input layer

input_layer = Input(shape=(28, 28, 1)) # Example: grayscale input

# Apply upsampling followed by a convolution to prevent checkerboard artifacts

x = UpSampling2D(size=(2, 2), interpolation='bilinear')(input_layer) # Bilinear upsampling by a factor of 2 (the default interpolation is 'nearest')

output_layer = Conv2D(

filters=64,

kernel_size=(3, 3),

padding='same',

activation='relu' # Add ReLU for feature extraction

)(x)

# Create the model

model = Model(inputs=input_layer, outputs=output_layer)

# Compile the model

model.compile(optimizer='adam', loss='mean_squared_error', metrics=['mae']) # Accuracy is not meaningful for pixel-value regression; track mean absolute error instead

# Print model summary

model.summary()

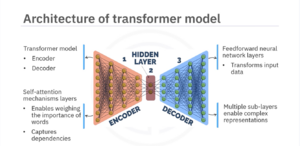

Transformers

- Originally developed for natural language processing (machine translation); now also used in image processing and time-series forecasting.

- Introduced in "Attention Is All You Need" (Vaswani et al., 2017).

- Self-attention mechanism enables parallel processing of input data.

- Captures long-range dependencies more effectively than RNNs.

- Forms the backbone of models like ViTs (Vision Transformers) and GPT.

- Encoder-Decoder Architecture

- Encoder: Processes input sequences, extracting contextual embeddings.

- Decoder: Generates output sequences, attending to encoder outputs.

- Key Components

- Self-Attention Layers: Assign importance to words/tokens, capturing dependencies.

- Feedforward Layers: Transform input embeddings through dense layers.

- Positional Encoding: Adds order awareness to input sequences.

- Multi-Head Attention: Captures diverse contextual relationships.

- Multiple Sub-Layers

- Each encoder/decoder block contains self-attention + feedforward layers, enabling complex representations.

Self-attention component

- Allows each input word to attend to all other words, capturing global context.

- Uses Query (Q), Key (K), and Value (V) matrices for attention computation.

- Steps:

- Compute attention scores: Dot product of Q and K.

- Scale scores: Divide by √d_k (the square root of the key dimension) to keep the softmax from saturating and stabilize gradients.

- Apply Softmax: Converts scores into attention weights.

- Weight values (V) using attention scores to get final representation.

- Enables parallel processing and better long-range dependencies than RNNs.

Code example: Self-attention calculation

import tensorflow as tf
from tensorflow.keras.layers import Layer

class SelfAttention(Layer):
    def __init__(self, d_model):
        super(SelfAttention, self).__init__()
        self.d_model = d_model
        self.query_dense = tf.keras.layers.Dense(d_model)
        self.key_dense = tf.keras.layers.Dense(d_model)
        self.value_dense = tf.keras.layers.Dense(d_model)

    def call(self, inputs):
        q = self.query_dense(inputs)  # (batch, seq_len, d_model)
        k = self.key_dense(inputs)
        v = self.value_dense(inputs)
        # Scaled dot-product scores, softmax over the key axis
        attention_weights = tf.nn.softmax(
            tf.matmul(q, k, transpose_b=True) / tf.math.sqrt(tf.cast(self.d_model, tf.float32)),
            axis=-1)
        output = tf.matmul(attention_weights, v)  # Weighted sum of the values
        return output

# Example usage

inputs = tf.random.uniform((1, 60, 512)) # Batch size of 1, sequence length of 60, and model dimension of 512

self_attention = SelfAttention(d_model=512)

output = self_attention(inputs)

print(output.shape) # Should print (1, 60, 512)

Transformer Encoder

✔ Composed of multiple stacked layers to process input sequences efficiently.

✔ Key Components:

- Self-Attention Mechanism → Enables each token to attend to all others, capturing dependencies.

- Feedforward Neural Network → Applies transformations after self-attention for deeper feature extraction.

- Residual Connections → Helps prevent vanishing gradients and stabilizes training.

- Layer Normalization → Normalizes activations, improving convergence.

- Positional Encoding → Adds sequence order information to input embeddings.

✔ Process Flow:

- Input embedding → Converts tokens into vector representations.

- Positional Encoding → Injects position-related information.

- Self-Attention → Computes contextual relationships.

- Feedforward Layers → Applies learned transformations.

- Normalization & Residuals → Ensure stability and better gradient flow.

# Code example: Transformer encoder

import tensorflow as tf

from tensorflow.keras.layers import Layer, MultiHeadAttention, Dense, LayerNormalization, Dropout

import numpy as np

# Positional Encoding Layer

class PositionalEncoding(Layer):
    def __init__(self, max_length, d_model):
        super(PositionalEncoding, self).__init__()
        self.pos_encoding = self.positional_encoding(max_length, d_model)

    def positional_encoding(self, max_length, d_model):
        positions = np.arange(max_length)[:, np.newaxis]  # Shape: (max_length, 1)
        i = np.arange(d_model)[np.newaxis, :]  # Shape: (1, d_model)
        angle_rates = 1 / np.power(10000, (2 * (i // 2)) / np.float32(d_model))
        angle_rads = positions * angle_rates
        angle_rads[:, 0::2] = np.sin(angle_rads[:, 0::2])  # Apply sin to even indices
        angle_rads[:, 1::2] = np.cos(angle_rads[:, 1::2])  # Apply cos to odd indices
        return tf.cast(angle_rads[np.newaxis, ...], dtype=tf.float32)  # Add batch dimension

    def call(self, x):
        return x + self.pos_encoding[:, :tf.shape(x)[1], :]

# Transformer Encoder Layer

class TransformerEncoder(Layer):
    def __init__(self, d_model, num_heads, dff, max_length, rate=0.1):
        super(TransformerEncoder, self).__init__()
        self.pos_encoding = PositionalEncoding(max_length, d_model)  # Add positional encoding
        self.mha = MultiHeadAttention(num_heads=num_heads, key_dim=d_model)
        self.ffn = tf.keras.Sequential([
            Dense(dff, activation='relu'),  # Expand feature space
            Dense(d_model)  # Project back to original size
        ])
        self.layernorm1 = LayerNormalization(epsilon=1e-6)
        self.layernorm2 = LayerNormalization(epsilon=1e-6)
        self.dropout1 = Dropout(rate)
        self.dropout2 = Dropout(rate)

    def call(self, x, training, mask=None):
        x = self.pos_encoding(x)  # Add positional encoding
        attn_output = self.mha(x, x, x, attention_mask=mask)  # Self-attention
        attn_output = self.dropout1(attn_output, training=training)
        out1 = self.layernorm1(x + attn_output)  # Residual + Norm
        ffn_output = self.ffn(out1)  # Feedforward Network
        ffn_output = self.dropout2(ffn_output, training=training)
        out2 = self.layernorm2(out1 + ffn_output)  # Residual + Norm
        return out2  # Output of Encoder Layer

# Example Usage

d_model = 512

num_heads = 8

dff = 2048

max_length = 60 # Max sequence length

encoder = TransformerEncoder(d_model=d_model, num_heads=num_heads, dff=dff, max_length=max_length)

x = tf.random.uniform((1, max_length, d_model)) # Batch of 1, 60 tokens, 512 dimensions

mask = None

output = encoder(x, training=True, mask=mask)

print(output.shape) # Expected: (1, 60, 512)

Transformer decoder

✔ Generates sequences based on context from the encoder.

✔ Cross-Attention Mechanism → Attends to encoder outputs while generating target sequences.

✔ Takes Target Sequence as Input → Uses previously generated tokens to predict the next.

Key Components:

- Self-Attention → Allows each token to attend to previous tokens in the target sequence.

- Cross-Attention → Attends to encoder outputs for context.

- Feedforward Neural Network → Transforms attention outputs into meaningful representations.

- Masked Self-Attention → Ensures predictions depend only on past tokens (prevents peeking).

✔ Process Flow:

- Receives encoder output + target sequence.

- Self-attention captures relationships within the target sequence.

- Cross-attention aligns the generated sequence with encoder outputs.

- Feedforward layers refine the representation.

- Outputs probabilities for the next token in the sequence.
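The masked self-attention step can be sketched in NumPy: a lower-triangular boolean mask lets position i attend only to positions 0..i, and disallowed scores are set to −∞ before the softmax so their weights become exactly 0. The helper names are hypothetical. In Keras's MultiHeadAttention, attention_mask uses True/1 for "may attend", so a mask like this (with a batch dimension added) is the kind of value the decoder example below leaves as look_ahead_mask=None; recent TensorFlow versions also accept use_causal_mask=True in the layer call.

```python
import numpy as np

def look_ahead_mask(seq_len):
    """Boolean mask: True where query position i may attend to key position j (j <= i)."""
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def masked_softmax(scores, mask):
    """Softmax over the last axis after setting disallowed scores to -inf."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=-1, keepdims=True)

mask = look_ahead_mask(4)
scores = np.random.default_rng(0).normal(size=(4, 4))  # stand-in for Q·K^T / sqrt(d_k)
weights = masked_softmax(scores, mask)
print(mask.astype(int))
print(np.round(weights, 3))  # upper triangle is exactly 0; each row sums to 1
```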

import tensorflow as tf

from tensorflow.keras.layers import Layer, MultiHeadAttention, Dense, LayerNormalization, Dropout

# Transformer Decoder Layer

class TransformerDecoder(Layer):
    def __init__(self, d_model, num_heads, dff, rate=0.1):
        super(TransformerDecoder, self).__init__()
        # Self-attention for target sequence
        self.mha1 = MultiHeadAttention(num_heads=num_heads, key_dim=d_model)
        # Cross-attention with encoder's output
        self.mha2 = MultiHeadAttention(num_heads=num_heads, key_dim=d_model)
        # Feedforward network
        self.ffn = tf.keras.Sequential([
            Dense(dff, activation='relu'),  # Expand feature space
            Dense(d_model)  # Project back to original size
        ])
        # Layer normalization and dropout layers
        self.layernorm1 = LayerNormalization(epsilon=1e-6)
        self.layernorm2 = LayerNormalization(epsilon=1e-6)
        self.layernorm3 = LayerNormalization(epsilon=1e-6)
        self.dropout1 = Dropout(rate)
        self.dropout2 = Dropout(rate)
        self.dropout3 = Dropout(rate)

    def call(self, x, encoder_output, training, look_ahead_mask=None, padding_mask=None):
        """
        x: Target sequence input
        encoder_output: Output from Transformer Encoder
        look_ahead_mask: Ensures decoder only attends to previous positions
        padding_mask: Masks padded positions in the input
        """
        # Self-attention with masking
        attn1 = self.mha1(x, x, x, attention_mask=look_ahead_mask)
        attn1 = self.dropout1(attn1, training=training)
        out1 = self.layernorm1(attn1 + x)  # Residual connection + normalization

        # Cross-attention with encoder output
        attn2 = self.mha2(out1, encoder_output, encoder_output, attention_mask=padding_mask)
        attn2 = self.dropout2(attn2, training=training)
        out2 = self.layernorm2(attn2 + out1)  # Residual connection + normalization

        # Feedforward network
        ffn_output = self.ffn(out2)
        ffn_output = self.dropout3(ffn_output, training=training)
        out3 = self.layernorm3(ffn_output + out2)  # Residual connection + normalization

        return out3  # Output of decoder layer

# Example usage

d_model = 512

num_heads = 8

dff = 2048

max_length = 60

decoder = TransformerDecoder(d_model=d_model, num_heads=num_heads, dff=dff)

x = tf.random.uniform((1, max_length, d_model)) # Batch of 1, 60 tokens, 512 dimensions

encoder_output = tf.random.uniform((1, max_length, d_model)) # Simulated encoder output

look_ahead_mask = None # Typically generated dynamically

padding_mask = None

output = decoder(x, encoder_output, training=True, look_ahead_mask=look_ahead_mask, padding_mask=padding_mask)

print(output.shape) # Expected: (1, 60, 512)

Transformers for sequential data

✔ Defined by order and dependencies → Each element depends on previous elements.

✔ Examples:

- Natural Language Text → Words depend on context from previous words.

- Time-Series Data → Stock prices, weather, and sensor data rely on past values.

✔ Common Models for Sequential Data:

- RNNs (Recurrent Neural Networks) → Handle short-term dependencies.

- LSTMs & GRUs → Manage long-term dependencies via gating mechanisms.

- Transformers → Use self-attention to capture dependencies without recurrence.

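Handling of sequential data by transformers

✔ Uses Self-Attention Mechanisms → Captures dependencies without recurrence.

✔ Handles Long-Range Dependencies → Attention connects any two positions directly, so gradients do not have to flow through a long chain of recurrent steps, which is where RNNs (and, to a lesser degree, LSTMs) suffer from vanishing gradients.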

✔ Supports Efficient Parallelization → Processes entire sequences simultaneously, unlike sequential RNNs.

✔ Applications:

- Natural Language Processing (NLP) → Machine translation, text generation (GPT, BERT).

- Time-Series Forecasting → Stock predictions, demand forecasting, anomaly detection.

Building the Transformer Encoder:

import tensorflow as tf

from tensorflow.keras.layers import Layer, Dense, LayerNormalization, Dropout

class MultiHeadSelfAttention(Layer):
    def __init__(self, embed_dim, num_heads=8):
        super(MultiHeadSelfAttention, self).__init__()
        self.embed_dim = embed_dim
        self.num_heads = num_heads
        self.projection_dim = embed_dim // num_heads
        self.query_dense = Dense(embed_dim)
        self.key_dense = Dense(embed_dim)
        self.value_dense = Dense(embed_dim)
        self.combine_heads = Dense(embed_dim)

    def attention(self, query, key, value):
        score = tf.matmul(query, key, transpose_b=True)
        dim_key = tf.cast(tf.shape(key)[-1], tf.float32)
        scaled_score = score / tf.math.sqrt(dim_key)
        weights = tf.nn.softmax(scaled_score, axis=-1)
        output = tf.matmul(weights, value)
        return output, weights

    def split_heads(self, x, batch_size):
        x = tf.reshape(x, (batch_size, -1, self.num_heads, self.projection_dim))
        return tf.transpose(x, perm=[0, 2, 1, 3])

    def call(self, inputs):
        batch_size = tf.shape(inputs)[0]
        query = self.query_dense(inputs)
        key = self.key_dense(inputs)
        value = self.value_dense(inputs)
        query = self.split_heads(query, batch_size)
        key = self.split_heads(key, batch_size)
        value = self.split_heads(value, batch_size)
        attention, _ = self.attention(query, key, value)
        attention = tf.transpose(attention, perm=[0, 2, 1, 3])
        concat_attention = tf.reshape(attention, (batch_size, -1, self.embed_dim))
        output = self.combine_heads(concat_attention)
        return output

class TransformerBlock(Layer):
    def __init__(self, embed_dim, num_heads, ff_dim, rate=0.1):
        super(TransformerBlock, self).__init__()
        self.att = MultiHeadSelfAttention(embed_dim, num_heads)
        self.ffn = tf.keras.Sequential([
            Dense(ff_dim, activation="relu"),
            Dense(embed_dim),
        ])
        self.layernorm1 = LayerNormalization(epsilon=1e-6)
        self.layernorm2 = LayerNormalization(epsilon=1e-6)
        self.dropout1 = Dropout(rate)
        self.dropout2 = Dropout(rate)

    def call(self, inputs, training):
        attn_output = self.att(inputs)
        attn_output = self.dropout1(attn_output, training=training)
        out1 = self.layernorm1(inputs + attn_output)
        ffn_output = self.ffn(out1)
        ffn_output = self.dropout2(ffn_output, training=training)
        return self.layernorm2(out1 + ffn_output)

class TransformerEncoder(Layer):
    def __init__(self, num_layers, embed_dim, num_heads, ff_dim, rate=0.1):
        super(TransformerEncoder, self).__init__()
        self.num_layers = num_layers
        self.embed_dim = embed_dim
        self.enc_layers = [TransformerBlock(embed_dim, num_heads, ff_dim, rate) for _ in range(num_layers)]
        self.dropout = Dropout(rate)

    def call(self, inputs, training=False):
        # Dropout on the input embeddings before the stack of encoder blocks
        x = self.dropout(inputs, training=training)
        for layer in self.enc_layers:
            x = layer(x, training=training)
        return x

# Example usage
embed_dim = 128
num_heads = 8
ff_dim = 512
num_layers = 4

transformer_encoder = TransformerEncoder(num_layers, embed_dim, num_heads, ff_dim)
inputs = tf.random.uniform((1, 100, embed_dim))  # (batch, seq_len, embed_dim)
outputs = transformer_encoder(inputs, training=False)  # Use keyword argument for 'training'
print(outputs.shape)  # Should print (1, 100, 128)
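The shape bookkeeping in `split_heads`, `attention`, and the head-merging step of `call` can be checked without TensorFlow. The NumPy sketch below mirrors that computation under one stated assumption: the random matrices `w_q`, `w_k`, `w_v`, `w_o` are hypothetical stand-ins for the `Dense` layers (biases omitted). It therefore reproduces the shapes and the softmax normalization, not the layer's actual values.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (batch, seq_len, embed_dim); w_*: (embed_dim, embed_dim) stand-in projections."""
    batch, seq_len, embed_dim = x.shape
    head_dim = embed_dim // num_heads  # plays the role of projection_dim

    def split_heads(t):
        # (batch, seq, embed) -> (batch, heads, seq, head_dim)
        return t.reshape(batch, seq_len, num_heads, head_dim).transpose(0, 2, 1, 3)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    # Scaled dot-product scores: (batch, heads, seq, seq)
    scores = q @ k.transpose(0, 1, 3, 2) / np.sqrt(head_dim)
    weights = softmax(scores, axis=-1)
    out = weights @ v  # (batch, heads, seq, head_dim)
    # Merge heads back: (batch, seq, embed)
    out = out.transpose(0, 2, 1, 3).reshape(batch, seq_len, embed_dim)
    return out @ w_o, weights


rng = np.random.default_rng(0)
embed_dim, num_heads = 128, 8
x = rng.standard_normal((1, 100, embed_dim))
w_q, w_k, w_v, w_o = (rng.standard_normal((embed_dim, embed_dim)) * 0.05 for _ in range(4))
out, weights = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(out.shape)      # (1, 100, 128)
print(weights.shape)  # (1, 8, 100, 100)
```

Each row of `weights` is a probability distribution over the 100 positions, with one distribution per head. Merging the heads restores the input shape (1, 100, 128), which is what allows the residual connection in `TransformerBlock`.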

Advanced Transformer Applications

✔ Computer Vision → Processes images without CNNs, relying on self-attention instead.

✔ Vision Transformers (ViTs)

- Divides images into patches → Treats them as a sequence of tokens (like words in NLP).

- Applies Transformer architecture → Captures long-range dependencies across patches.

- Matches or outperforms CNNs when pre-trained on very large datasets (e.g., ImageNet-21k, JFT-300M). When trained on ImageNet alone, comparable CNNs often still do better because of their built-in inductive biases.

✔ Other Vision Transformer Variants

- Swin Transformer → Uses hierarchical feature maps with shifted windows.

- DETR (Detection Transformer) → End-to-end object detection with a Transformer encoder-decoder, removing hand-designed steps such as anchor generation and non-maximum suppression.
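The patch-to-token step can be sketched in NumPy using reshapes alone. This is a minimal illustration that assumes an (H, W, C) image whose sides divide evenly by the patch size. `patchify` is a hypothetical helper, not a library function. With the settings used in the ViT example in these notes (224 x 224 RGB images, 16 x 16 patches), it yields (224 / 16)^2 = 196 tokens of 16 x 16 x 3 = 768 values each.

```python
import numpy as np


def patchify(image, patch_size):
    """Split an (H, W, C) image into non-overlapping square patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C):
    one flattened "token" per patch, ordered row by row across the image.
    """
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0, "image must tile evenly"
    gh, gw = h // patch_size, w // patch_size  # grid of patches (rows, cols)
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (gh, gw, p, p, c)
    return patches.reshape(gh * gw, patch_size * patch_size * c)


image = np.random.default_rng(0).random((224, 224, 3))
tokens = patchify(image, patch_size=16)
print(tokens.shape)  # (196, 768): a 14 x 14 grid of 16 x 16 x 3 patches
```

`PatchEmbedding` below does this split and a linear projection of each 768-value patch in one step, using a `Conv2D` whose kernel size and stride both equal the patch size.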

import tensorflow as tf
from tensorflow.keras.layers import Layer, Dense, LayerNormalization, Dropout, MultiHeadAttention, Conv2D, Reshape, Flatten
from tensorflow.keras.models import Model

# ==========================================
# 🔹 Patch Embedding Layer (Converts Images to Patches)
# ==========================================
class PatchEmbedding(Layer):
    def __init__(self, img_size, patch_size, embedding_dim):
        """
        Converts an image into a sequence of patches and embeds them.

        Args:
        - img_size (int): Size of the input image (assumes square images).
        - patch_size (int): Size of each square patch.
        - embedding_dim (int): Dimensionality of the patch embeddings.
        """
        super(PatchEmbedding, self).__init__()
        self.num_patches = (img_size // patch_size) ** 2  # Calculate number of patches
        self.embedding_dim = embedding_dim
        # kernel_size == strides == patch_size: the convolution visits each
        # non-overlapping patch once and linearly projects it to embedding_dim
        self.projection = Conv2D(filters=embedding_dim,
                                 kernel_size=patch_size,
                                 strides=patch_size,
                                 padding='valid')
        # Reshape the (img_size/patch_size, img_size/patch_size) grid into a sequence
        self.flatten = Reshape((self.num_patches, embedding_dim))

    def call(self, images):
        """
        Forward pass: Extract patches and embed them.

        Args:
        - images (Tensor): Shape (batch_size, img_size, img_size, channels).

        Returns:
        - Tensor of shape (batch_size, num_patches, embedding_dim).
        """
        patches = self.projection(images)  # Extract and embed patches
        return self.flatten(patches)  # Flatten to sequence

# ==========================================
# 🔹 Transformer Block (Self-Attention + Feedforward)
# ==========================================
class TransformerBlock(Layer):
    def __init__(self, embed_dim, num_heads, ff_dim, rate=0.1):
        """
        Transformer Encoder Block for Self-Attention and Feedforward Network.

        Args:
        - embed_dim (int): Dimension of the input embeddings.
        - num_heads (int): Number of attention heads.
        - ff_dim (int): Dimension of the feedforward network.
        - rate (float): Dropout rate.
        """
        super(TransformerBlock, self).__init__()
        # Multi-head self-attention. key_dim is the size of EACH head, so embed_dim is
        # split across the heads (d_k = d_model / h, as in the original Transformer)
        self.att = MultiHeadAttention(num_heads=num_heads, key_dim=embed_dim // num_heads)
        # Feedforward network (expansion + projection)
        self.ffn = tf.keras.Sequential([
            Dense(ff_dim, activation="relu"),  # Expand feature space
            Dense(embed_dim),                  # Project back to original size
        ])
        # Layer normalization and dropout layers
        self.layernorm1 = LayerNormalization(epsilon=1e-6)
        self.layernorm2 = LayerNormalization(epsilon=1e-6)
        self.dropout1 = Dropout(rate)
        self.dropout2 = Dropout(rate)

    def call(self, inputs, training=False, mask=None):
        """
        Forward pass for the Transformer block.

        Args:
        - inputs (Tensor): Input tensor of shape (batch_size, num_patches, embed_dim).
        - training (bool): Whether the model is in training mode (defaults to inference).
        - mask (Tensor, optional): Attention mask.

        Returns:
        - Tensor with the same shape as input (batch_size, num_patches, embed_dim).
        """
        # Apply self-attention (query, value, and key are all the input sequence)
        attn_output = self.att(inputs, inputs, inputs, attention_mask=mask)
        attn_output = self.dropout1(attn_output, training=training)
        out1 = self.layernorm1(inputs + attn_output)  # Residual connection + normalization
        # Apply feedforward network
        ffn_output = self.ffn(out1)
        ffn_output = self.dropout2(ffn_output, training=training)
        return self.layernorm2(out1 + ffn_output)  # Residual connection + normalization

# ==========================================
# 🔹 Patch Extraction Function
# ==========================================
def extract_patches(images, patch_size=16):
    """
    Extracts non-overlapping patches from the input image.
    Standalone alternative to PatchEmbedding: returns raw (un-embedded) pixel
    patches. The VisionTransformer below does not call it.

    Args:
    - images (Tensor): Input image tensor of shape (batch_size, img_size, img_size, channels).
    - patch_size (int): Side length of each square patch.

    Returns:
    - Tensor of shape (batch_size, num_patches, patch_size * patch_size * channels).
    """
    batch_size = tf.shape(images)[0]  # Get batch size dynamically
    # Extract fixed-size patches using TensorFlow's built-in function
    patches = tf.image.extract_patches(
        images=images,                           # Input image tensor
        sizes=[1, patch_size, patch_size, 1],    # Patch size (e.g., 16x16)
        strides=[1, patch_size, patch_size, 1],  # Step equals patch size (non-overlapping patches)
        rates=[1, 1, 1, 1],                      # No dilation (standard patches)
        padding='VALID'                          # No padding applied
    )
    # Reshape the grid of patches into sequence format;
    # the last axis already holds patch_size * patch_size * channels values
    patch_dim = tf.shape(patches)[-1]
    patches = tf.reshape(patches, [batch_size, -1, patch_dim])
    return patches  # Returns extracted patches as a sequence

# ==========================================
# 🔹 Vision Transformer Model
# ==========================================
class VisionTransformer(Model):
    def __init__(self, img_size, patch_size, embedding_dim, num_heads, ff_dim, num_layers, num_classes):
        """
        Vision Transformer (ViT) model.

        Args:
        - img_size (int): Size of the input image (assumes square images).
        - patch_size (int): Size of each patch.
        - embedding_dim (int): Dimensionality of patch embeddings.
        - num_heads (int): Number of attention heads.
        - ff_dim (int): Feedforward layer dimension.
        - num_layers (int): Number of Transformer blocks.
        - num_classes (int): Number of output classes.
        """
        super(VisionTransformer, self).__init__()
        # Patch embedding layer (converts images into patch embeddings)
        self.patch_embed = PatchEmbedding(img_size, patch_size, embedding_dim)
        # Stack multiple Transformer blocks
        self.transformer_layers = [TransformerBlock(embedding_dim, num_heads, ff_dim) for _ in range(num_layers)]
        # Classification head. A simplification: the original ViT classifies from a
        # learnable [class] token instead of flattening every patch embedding.
        self.flatten = Flatten()
        self.dense = Dense(num_classes, activation='softmax')

    def call(self, images, training=False):
        """
        Forward pass for the Vision Transformer model.

        Args:
        - images (Tensor): Input image tensor of shape (batch_size, img_size, img_size, channels).
        - training (bool): Whether the model is in training mode (defaults to inference).

        Returns:
        - Tensor of shape (batch_size, num_classes) with class probabilities.
        """
        patches = self.patch_embed(images)  # Convert image to patch embeddings
        for transformer_layer in self.transformer_layers:
            patches = transformer_layer(patches, training=training)  # Apply Transformer blocks
        x = self.flatten(patches)  # Flatten to feed into classification head
        return self.dense(x)  # Output class probabilities

# ==========================================
# 🔹 Example Usage: Vision Transformer
# ==========================================
num_patches = 196  # (224 // 16) ** 2 = 14 x 14 grid of 16x16 patches (informational; PatchEmbedding computes it)
embedding_dim = 128
num_heads = 4
ff_dim = 512
num_layers = 6
num_classes = 10  # e.g., CIFAR-10 (its 32x32 images would need resizing to 224x224)

# Instantiate Vision Transformer model
vit = VisionTransformer(img_size=224, patch_size=16, embedding_dim=embedding_dim,
                        num_heads=num_heads, ff_dim=ff_dim, num_layers=num_layers, num_classes=num_classes)

# Generate a batch of random input images (batch_size=32, image_size=224x224, 3 color channels)
images = tf.random.uniform((32, 224, 224, 3))

# Forward pass through the Vision Transformer model (inference mode, training=False by default)
output = vit(images)

# Print the shape of the model output (should be (32, 10) for 10-class classification)
print(output.shape)  # Expected output: (32, 10)
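Neither the `PatchEmbedding` layer nor the Transformer blocks above add any position information. The original ViT adds learnable position embeddings to the patch embeddings because self-attention on its own is permutation-equivariant: shuffling the input tokens only shuffles the outputs in the same way, so the attention layers cannot tell where a patch came from. In the model above, only the `Flatten` + `Dense` head, whose weights differ per position, is sensitive to patch order. The NumPy sketch below checks this property for a single attention head with random weights. `pos_embed` is a hypothetical random array standing in for learned position embeddings.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a (seq_len, dim) matrix."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)
    return weights @ v


rng = np.random.default_rng(0)
num_patches, dim = 196, 64
tokens = rng.standard_normal((num_patches, dim))
w_q, w_k, w_v = (rng.standard_normal((dim, dim)) * 0.1 for _ in range(3))
perm = rng.permutation(num_patches)  # shuffle the patch order

# No position information: attention(shuffled input) == shuffled attention output
plain = self_attention(tokens, w_q, w_k, w_v)
plain_shuffled = self_attention(tokens[perm], w_q, w_k, w_v)
print(np.allclose(plain_shuffled, plain[perm]))  # True

# Add a per-slot embedding after shuffling: the symmetry is broken
pos_embed = rng.standard_normal((num_patches, dim))  # stand-in for learned parameters
with_pos = self_attention(tokens + pos_embed, w_q, w_k, w_v)
with_pos_shuffled = self_attention(tokens[perm] + pos_embed, w_q, w_k, w_v)
print(np.allclose(with_pos_shuffled, with_pos[perm]))  # False
```

The position embedding is tied to the slot, not to the patch content. After it is added, the same patch at a different position produces a different result, which lets the encoder use the image's spatial layout.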

Speech recognition